Like the rest of New Things Under the Sun, this article will be updated as the state of the academic literature evolves; you can read the latest version here.

The following is adapted from a paper I wrote for the OECD workshop on AI and the productivity of science (though this piece has nothing about AI in it!). No podcast this week; this piece has so many figures that it wasn’t working well. Happy Thanksgiving to American readers!

There is a common view among people interested in technological progress that the rate of progress slowed significantly between 1970 and 2020, as compared to 1920 to 1970 (though it might be picking up again in 2021). Today, I want to look at this question through the lens of one particular sector: American agriculture.

Agriculture? Really?

Perhaps surprisingly, agriculture is a good setting to study long-run changes in technological progress.

Agriculture has quite good data over a very long time period.

Agriculture is a technologically dynamic sector; there is a lot of change for us to study.

Agricultural products haven’t changed much, even if the way we produce them has. Corn is corn and the way they measured it 150 years ago is not that different from the way it’s measured now. In contrast, a car today is not the same thing as a car from 50 years ago (nor is a computer, phone, or building).

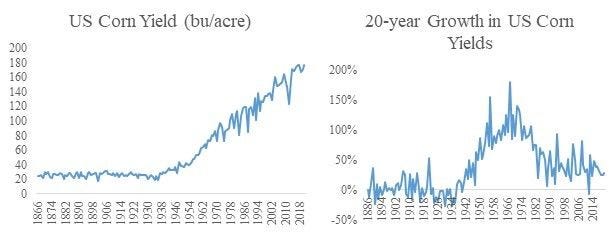

The following figure, which shows the number of corn bushels produced per year in the USA, illustrates all three of these virtues. It gives us more than 150 years of data, we see there have been big changes in the sector, and what it’s measuring isn’t controversial in the same way something like automobiles or, god forbid, GDP might be.

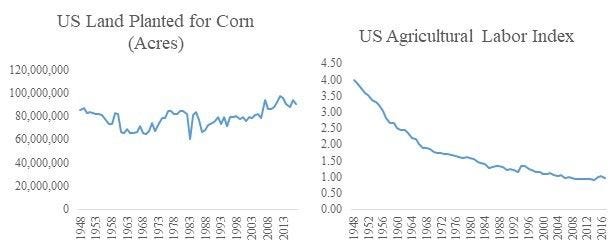

Two of the most important inputs to agricultural production, over the very long run, have been labor and land. And the enormous increase in agricultural output in the US has been sustained without any significant increase in these two inputs. Land use has stayed roughly flat, while labor has fallen dramatically. That suggests it’s technology that instead accounts for the big upswing in production.

To an economist, “technology” is the efficiency with which inputs are transformed into outputs. And by that definition, we can already derive a very crude measure of technological progress in agriculture using just this data. A common measure of technology in agriculture is simply yields, which can be thought of as the rate at which one input (land) is transformed into an output (for example, bushels of corn). Technological progress is the growth rate of this efficiency, and so a transparent and simple measure of technological progress in agriculture is just the growth rate of yields. However, since agricultural output fluctuates a lot due to weather (more on that soon), we need to focus on very long-run measures of progress, where year-to-year weather effects are small relative to the overall trend. The figure below plots average US corn yields on the left and the twenty-year growth rate of those yields on the right. It clearly shows there has been a dramatic slowdown in the rate of technological progress by this measure (though note that yield growth today remains significantly higher than the stagnation that prevailed prior to 1940).

The advantage of measuring technological progress in agriculture with yields is that, without much controversy, we can measure corn and land, and therefore yields and the change in yields. There’s not really any need for theory or assumptions. And that simple measure shows a sharp slowdown in yield growth, mostly (but not entirely) because growth has instead been constant in absolute terms (and therefore must be decreasing in percentage, or exponential, terms).
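To make that last point concrete, here is a minimal Python sketch. The yield numbers are hypothetical (a series that starts at 40 bushels per acre in 1940 and gains a fixed 2 bushels per acre every year), not actual USDA data, and the helper name `trailing_growth` is just an illustrative choice.

```python
def trailing_growth(series, window=20):
    """Percent change over the trailing `window` years, for each year where it is defined."""
    return {
        year: 100.0 * (series[year] / series[year - window] - 1.0)
        for year in sorted(series)
        if year - window in series
    }

# Hypothetical yields: 40 bu/acre in 1940, rising by a constant 2 bu/acre per year.
yields = {year: 40.0 + 2.0 * (year - 1940) for year in range(1940, 2021)}

growth = trailing_growth(yields)
for year in (1960, 1980, 2000, 2020):
    # Prints 100.0, 50.0, 33.3, 25.0: the same absolute gain is a shrinking percentage gain.
    print(year, round(growth[year], 1))
```

In this made-up series the absolute gain never changes, yet the twenty-year growth rate falls from 100% to 25%, simply because the same 40-bushel gain is measured against an ever larger base.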

But simply looking at yields is also unsatisfying because it misses so many aspects of agricultural production. A lot goes into agriculture besides land and labor. For us to be really confident that technological progress is slowing, we would want to account for all those other inputs. And we would also need to rule out some alternative explanations - maybe technological progress is proceeding at the same clip as ever, but the climate or pest environment has deteriorated, and that’s why output hasn’t grown as fast, relative to inputs? So let’s turn to those issues now.

Many Inputs and Outputs

To study progress in the agricultural sector as a whole, we literally need a way to compare apples and oranges. Fortunately, economists have methods for aggregating baskets of goods over time by using data on prices and how those prices change. If we use those methods to construct a measure of total US agricultural output, for the period 1949 through the present, we get a picture that doesn’t look that different from the trends we see in corn production: total US agricultural output nearly tripled between 1949 and 2017.

The input side is trickier. Technological progress in agriculture has been instantiated by waves of new technological inputs, which are gradually adopted by a larger and larger share of farmers over time, as can be seen in the figure below, from Pardey and Alston (2021).

To try and more accurately measure technological progress, we need to go beyond dividing one output (bushels of corn) by one input (acres), and move on to dividing an index of all outputs by an index of all inputs, appropriately adjusted for quality and the changing mix of inputs. The resulting analogue to yield is called total factor productivity or TFP (or sometimes multi-factor productivity).

Economic theory provides a framework for constructing these estimates. If you have a measure of all the different inputs used in production, under some standard assumptions, you can weight indices by their share of total costs and add them up to generate an aggregate index measure. A lot of methodological choices go into constructing these data series and adjusting inputs for their quality though and ultimately we cannot appeal to a simple and objective metric to check that our assumptions are right. These measures are ultimately theoretical constructs.
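As a rough illustration of what "weight by cost share and add up" can look like, here is a minimal Python sketch of a Törnqvist-style calculation, in which the growth of the aggregate input is the cost-share-weighted sum of the log changes in each input. This is an assumption on my part about one standard way to do it; I am not claiming it is exactly what either team discussed below does, it ignores quality adjustment entirely, and every number in it is made up.

```python
import math

def input_growth(q0, q1, s0, s1):
    """Log growth of aggregate input: each input's log change, weighted by its average cost share."""
    return sum(
        0.5 * (s0[k] + s1[k]) * math.log(q1[k] / q0[k])
        for k in q0
    )

def tfp_growth(y0, y1, q0, q1, s0, s1):
    """Log TFP growth = log growth of output minus log growth of aggregate input."""
    return math.log(y1 / y0) - input_growth(q0, q1, s0, s1)

# Hypothetical input quantities and cost shares for two periods (illustrative only).
q_start = {"land": 100.0, "labor": 100.0, "capital": 100.0}
q_end = {"land": 100.0, "labor": 60.0, "capital": 130.0}
s_start = {"land": 0.2, "labor": 0.5, "capital": 0.3}
s_end = {"land": 0.2, "labor": 0.4, "capital": 0.4}

# Hypothetical output index rising from 100 to 150 over the same period.
g = tfp_growth(100.0, 150.0, q_start, q_end, s_start, s_end)
print(f"TFP grew by about {100 * (math.exp(g) - 1):.1f}%")
```

Changing the hypothetical capital figure in `q_end` changes the answer directly, which is why the measurement of any single input matters so much for the final TFP number.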

But US agriculture is fortunate in having two different teams of economists – one composed of government economists affiliated with the US Department of Agriculture, the other led by academics affiliated with the International Science and Technology Practice and Policy group (InSTEPP) – who have tackled this project using somewhat different methodologies. The extent to which the two different approaches converge on the same findings should give us some confidence.

As discussed in Fuglie, Clancy, Heisey, and MacDonald (2017), these two approaches agree in some respects and not in others. They substantially agree, for example, on the question of how much output is produced by the US farm sector and the amount of labor used. They disagree more on the use of land. But unfortunately, they disagree quite significantly in their measurement of capital inputs: things like tractors and durable goods. This disagreement stems from divergent methodologies: the USDA backs out capital inputs from spending data and economic theory, while InSTEPP attempts to do a physical inventory of capital used in farm production. The difference is particularly acute during the 1980s (when there was a farm crisis). This matters, because to calculate TFP we need to compare outputs to inputs, and if there is disagreement on inputs, there will also be disagreement on the ratio of outputs to inputs, or TFP. Accordingly, there has been a long back-and-forth between these groups about what exactly is happening to agricultural productivity.[1] Fortunately, these data series are beginning to come to a consensus as the importance of the 1980s begins to recede in the rearview mirror.

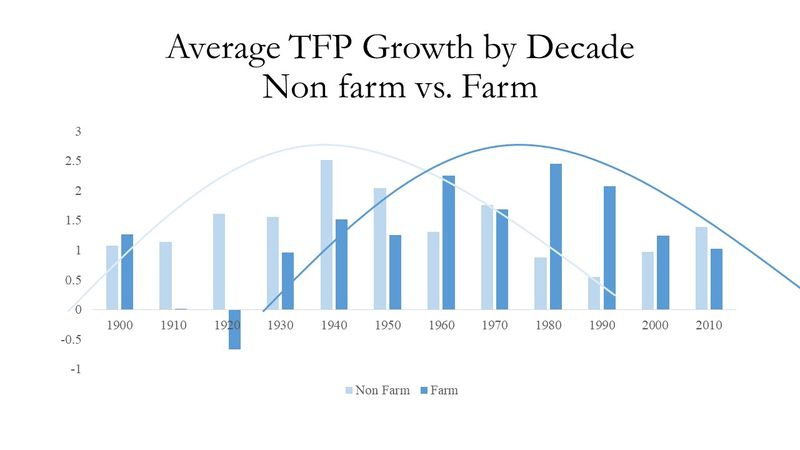

We can look at how the 20-year growth rate of TFP has changed over the very long run. In the figure below, I plot estimates from InSTEPP and the USDA for the 20-year growth rate of agricultural TFP, and for comparison, the 20-year growth rate of TFP for the entire US economy, so we can see how any technological stagnation in US agriculture stacks up to any stagnation in the total US economy. (Note: InSTEPP data runs through 2007, USDA data through 2017)

By either measure, the figure above clearly indicates that TFP growth in agriculture has indeed slowed. Notably, the magnitude of the decline in TFP growth is similar across all three series, though they differ in timing. Setting aside the 1980s, where there is considerable disagreement between InSTEPP and the USDA, each series hangs around the 40-50% range in the first half of the series and ends in the 20-30% range. There is some significant disagreement about when this slowdown began, with InSTEPP showing declines beginning in the 1990s and USDA placing them in the 2000s.

In any event, the two different TFP estimates suggest a slowdown comparable to the slowdown in the entire US economy in the late twentieth century or early twenty-first, but with a later onset. That’s later than the yield data shows, which indicates it is important to consider other inputs, but in any event we still see a slowdown.

But are these figures really showing us anything about technological progress?

Don’t Forget Mother Nature

The trouble is, the efficiency at which we convert inputs into outputs can change for a variety of reasons, only some of which are what we would think of as “technological progress.” Specifically, in agriculture, phenomena in the natural world also affect agricultural production. Of these factors, the potential impact of changing weather over the last several decades has been most studied. In a year with good weather, the same number of inputs will tend to generate more outputs. That will look like a year with good yields or higher than normal TFP, but it won’t mean technological progress has improved. And a year with bad weather will look like we have lower yields or TFP. Thus, a run of bad weather can also generate slowing TFP growth in the data, even if underlying technological progress is constant.
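The point about a run of bad weather can be illustrated with a toy calculation. In this Python sketch, every number is an assumption chosen for illustration: technology improves at a steady 1.5% per year, measured TFP is that technology level multiplied by a weather factor, and the last five years of a twenty-year window have weather 5% worse than normal.

```python
def measured_tfp(years, tech_growth, weather):
    """Measured TFP index = underlying technology level times that year's weather multiplier."""
    return [
        (1.0 + tech_growth) ** t * weather[t]
        for t in range(years)
    ]

# Assumption: 1.0 is a normal year; the final five years are 5% worse than normal.
weather = [1.0] * 15 + [0.95] * 5
tfp = measured_tfp(20, 0.015, weather)

first_half = tfp[9] / tfp[0] - 1.0     # years 0-9, all normal weather
second_half = tfp[19] / tfp[10] - 1.0  # years 10-19, ending in bad weather
print(f"{100 * first_half:.1f}% vs {100 * second_half:.1f}%")
```

Measured growth is lower in the second decade even though the assumed rate of technological progress never changed, which is exactly the confound the studies below try to rule out.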

A lot of papers have tried to assess the effect of weather on our measurement of agricultural TFP in the USA. There are several approaches you can take.

Liang et al. (2017) look at how correlated year-to-year changes in the USDA TFP estimates are with annual weather across the USA, over the period 1951-2004. They find weather is important for explaining annual fluctuations in TFP (i.e., a year with exceptionally good weather is indeed a year when TFP is also measured to be unusually high), and that the correlation between weather and TFP has grown stronger over time as US agriculture has shifted production increasingly away from livestock and towards crops. However, on the whole, good years and bad years roughly offset each other, exerting only a tiny drag on TFP growth over the period, too small to explain the decline in TFP observed above.

Chambers and Pieralli (2020) take a different approach to estimating the impact of weather. Rather than examining how well correlated TFP is with weather, they essentially treat weather as another input, alongside land, labor and capital. They derive new measures of productivity that attempt to strip out weather effects. Their estimate also relies on state-level measures of TFP, rather than national, and they find that changing weather does, indeed, account for a large chunk of changing productivity at the level of individual states. But again, on the national level these changes largely wash out: some states enjoyed more favorable weather and some less, such that the net effect on US agriculture was again far too small to account for the slowdown in TFP growth.

Lastly (though we could do many more), Plastina, Lence, and Ortiz-Bobea (2021) statistically construct estimates of how many agricultural inputs would be used under conditions of “average” weather (for the period 1960-2004) and how much output would be produced under “average” weather as well. They can compare the change in TFP under this hypothetical scenario where weather was unchanging at average levels to what has actually been observed in order to assess the impact of weather on our estimates of total factor productivity. They also focus their efforts on state-by-state data, and again do not find worsening weather explains much about national TFP trends, at least through 2004.

Altogether, these results suggest worsening weather doesn’t explain the slowdown in yield or TFP growth in American agriculture. But they do suggest bad times ahead for US agriculture. While weather has not moved strongly enough in one direction over 1970-2004 to account for the data we observe, it does exert a powerful influence on agriculture. And that weather is expected to worsen over the twenty-first century, as a consequence of climate change. So even if a slowdown in TFP growth thus far can’t be blamed on the weather, going forward it’s likely to pose a real headwind to agricultural productivity growth.

(One potentially important caveat: the slowdown in agricultural TFP that we see in the USDA series mostly dates to the period after 2004, when these studies run out of state-level data. If US weather has changed a lot since 2004, it could be a factor in explaining the decline in TFP growth since then; but my understanding is that there has been no major discontinuity since then - see, for example this (figure 3) and this. But correct me if I’m wrong and I can update!)

Climate change is also related to the second possible phenomenon from the natural world that might affect long-run productivity of American agriculture. It has long been known that CO2 is potentially beneficial for crops and that rising atmospheric CO2 over the twentieth century may have augmented yield growth. But how big is this effect?

Taylor and Schlenker (2021) attempt to infer the impact of CO2 on US crop yields for corn, wheat, and soy, by exploiting new highly specific spatial data on CO2 levels across the US for the period 2010 to 2018. Since CO2 diffuses over a matter of weeks or even months, there is some meaningful variation in the exposure to CO2 during the growing season of US crops. They use a variety of statistical tricks to try and tease out these effects, estimating that as much as 10% of corn yield improvements since 1940 could be attributed to rising CO2, and as much as 40% of wheat yield improvements. However, other papers, using approaches where the CO2 level for study plots is experimentally varied, instead of trying to exploit natural variations, find significantly smaller effects (Kimball 2016). In any event, it seems clear that rising CO2 levels will tend to boost crop yields (though the magnitude of the effect is unclear), and since CO2 levels continued to rise through the period of TFP and yield growth slowdowns, if anything this is another reason to believe technological progress in US agriculture may have been even slower than the data suggests.

A final point to consider is the impact of biological pests on agricultural production, such as diseases, weeds, and insects. If the pest burden on US agriculture increased significantly in the last part of the period examined, that could also potentially explain declining TFP growth, without any need to resort to slowing technological progress. This would manifest in reduced output for a constant set of inputs, or greater use of inputs (such as pesticides) to maintain the same level of output, or some combination of the two. Indeed, increased herbicide resistance has led to a rise in the use of herbicides in agriculture since 2010 (Hellerstein et al. 2019, chapter 2.8). However, this impact is also too small to account for the decline in TFP growth over the periods observed, since herbicide use is too small a share of total inputs. Again, alternative explanations for the data don’t seem to work. We’re left with the conclusion that technological progress has likely slowed.

Why?

So if technological progress in American agriculture has slowed, why might that be? One major candidate for the explanation is the stagnation in agricultural R&D that has prevailed from roughly 1980 to 2007.

As discussed at great length here, a constant level of R&D effort tends to result in smaller and smaller gains in innovation. So if that’s true, the roughly stagnant R&D would be expected to result in a slower and slower rate of technological progress. Moreover, as discussed here, the lag between basic science and technological application is often on the order of twenty years. So it should not be surprising that stagnant R&D would lead to a slowdown in productivity growth whose onset began twenty years later, which is roughly what the USDA data shows.

That said, I wonder if there isn’t a second factor as well. I think this figure, which we already looked at once, is suggestive. Note the slowdown came decades after a slowdown in the wider US economy. Maybe what’s happening in American agriculture is an echo of what happened earlier to the economy at large?

And it turns out, we can glimpse something like this kind of “echo” on the upside as well. Alston and Pardey (2021) come up with some estimates of the decade-by-decade growth rate of productivity in the farm and non-farm economy, going back to the 1890s. Very roughly speaking, a few decades after an upswing in the productivity of the non-farm economy, we also saw an upswing in the productivity of the farm economy.

And we have other reasons to think the dynamics of technological progress in agriculture might track dynamics in the wider economy, with an echo. Clancy, Heisey, Ji, and Moschini (2021) showed that patented agricultural technologies rely quite heavily on ideas and knowledge developed outside of agriculture. Many kinds of agricultural technology patents are more likely to cite non-agricultural patents than agricultural ones, non-agricultural journals than agricultural ones, and to borrow rather than originate novel technological words and phrases in their text (this paper is discussed a bit more here).

So maybe the slowdown in technological progress in agriculture is also partially explained by a slowdown in progress outside of agriculture, since that meant there were fewer new ideas to adapt for agriculture? In fact, to get even more speculative, maybe the stagnation in agricultural R&D since 1980 is partially due to a lack of exciting new avenues for doing agricultural R&D?

But hold on a second; surely agriculture is not the only sector that heavily borrows from other fields. Every industry is small relative to the rest of the economy. But it wouldn’t make sense to therefore predict that every sector’s path of technological progress follows the path of the rest of the economy’s but lagged by a few decades. Everyone can’t be a follower; somebody has to lead!

But I think we have a few reasons to think agriculture is a bit unusual here. Agriculture, after all, is rural and innovations tend to disproportionately emerge in cities and diffuse slowly out. Moreover, it’s not like learning about an innovation instantly translates into productivity gains. Pardey and Alston (2021) argue one reason productivity gains in agriculture lag those in the non-farm economy is because it is also necessary for a process of economic reorganization to take place in order to reap the benefits of these new technologies. For example, if technology favors larger farms, there will be a protracted period of farm consolidation (which we have in fact observed). Of course, other sectors also need to reorganize to best take advantage of technologies that originate elsewhere, but agriculture may be particularly slow to undergo these painful transitions, given how intertwined it is with family, culture, and place.

If we buy this story, then it may be that the slowdown in technological progress in American agriculture is actually just another way of looking at a broader slowdown in technological progress. It’s like inferring the shape of an object from its shadow.

Thanks for reading! If you liked this, you might also enjoy this article arguing that innovation has tended to get harder, or this looking at the importance of ideas generated elsewhere. For other related articles by me, follow the links listed at the bottom of the article’s page on New Things Under the Sun (.com). And to keep up with what’s new on the site, of course…

[1] See Alston, Beddow and Pardey 2008, Fuglie 2008, Alston et al. 2010, Ball, Schimmelpfennig, and Wang 2013, Wang et al. 2015, and Andersen et al. 2018.

I think it’s becoming clear to anyone seriously analyzing technological progress that it has been slowing across most fields. I think two things obfuscate this: 1) The last 50 years of extremely rapid computational growth, and the benefits that it provides across industries, have been dramatic. 2) There is no shortage of hype and promises to fuel a feeling of ever accelerating technology change.

However with respect to number one it’s clear that Moore’s law has been slowing and started really slowing down around 2010. As a lead technologist in a computer company, I tracked Moore’s law very closely for decades and believe me, the slow down was clear by every measure: computation per dollar, computation per watt, storage cost per dollar, etc…

With Moore’s Law slowing down that is essentially the last big technology Leap of the last 200 years.

There are so many examples to choose from it’s hard to know where to start, but let’s just put it this way: we haven’t discovered any new usable atoms on the periodic table for a long time. This is why material science is fairly stuck despite all the hype about nano this or carbon that. Just look at Elon Musk’s fancy new rocket ship… what is it using for fuel? Liquid oxygen and kerosene or methane? What is it made out of? Aluminum and stainless steel! Hmmm… Not too different than what was used in World War II for the V2 and in the 70’s for Apollo.

How about some of the most advanced aluminum alloys. Did you know that one of them, that is still considered fairly state of the art, was first used in 1938 on the Mitsubishi zero fighter? That’s 84 years ago? Yes they have gotten cheaper and now you can find them on common products, I agree, but there is no new aluminum alloy that is 3, 5, or 10 times stronger than there was 80 years ago.

Look at the efficiency of steam engines that convert heat into electricity, such as those used in our power plants. The first Newcomen engine was about 0.5-1 percent efficient, then James Watt created an engine that was 5% efficient; now they’re 40 to 50% efficient. That is a growth of 50-100 times, and since you can’t get more than 100% efficient, there’s only about 2X more to go.

The math is inescapable, we have simply squeezed most the blood out of most of the turnips. There simply isn’t a shelf full of Star Trek and Star Wars technologies ready to be quickly invented.

On top of that, some of the disciplines are getting so complex that it’s beyond the capabilities of one person, one very intelligent person even, to make significant progress. The days of one Thomas Edison and a small team whipping out 10 or 15 inventions in a few years that shake up the world are long gone. What we have today are large teams of investigators in large institutions working for years to make small incremental advances in one small area.

I am not sure whether Substack notified you, but I cross-posted this article earlier this week. It is part of my series of cross-posts on what I believe are the best progress-related articles on Substack.