Getting an academic field to change its ways is hard. Independent scientists can’t just go rogue - they need research funding, they need to publish, and they need (or want) tenure. All those things require convincing a community of your peers to accept the merit of your research agenda. And yet, humans are humans. They can be biased. And if they are biased towards the status quo, it can be hard for change to take root.

But it does happen. And I think changes in the field of economics are a good illustration of some of the dynamics that make that possible.

The Applied Turn in Economics

In 1983, the economist Edward Leamer wrote of his own field:

Hardly anyone takes data analysis seriously. Or perhaps more accurately, hardly anyone takes anyone else’s data analysis seriously.

But in subsequent decades, economics made a famous “applied turn.” The share of empirical papers in three top journals rose from a low of 38% in the year of Leamer’s paper to 72% by 2011! The field also began giving its top awards - the economics Nobel and the John Bates Clark award for best economist under 40 - to empirical economists. These new empirical papers were strongly characterized by careful attention to credibly detecting causation (rather than mere correlation).

This applied turn seems to have worked out pretty well. In 2010, Joshua Angrist and Jörn-Steffen Pischke wrote a popular follow-up to Leamer’s article titled The Credibility Revolution in Empirical Economics: How Better Research Design is Taking the Con out of Econometrics. The title is an apt summary of the argument. More quantitatively, a 2020 paper by Angrist et al. tried to assess how this empirical work is received in and outside of economics by looking at citations to different kinds of articles. Consistent with Leamer’s complaints, when looking at total citations received by papers, Angrist et al. find empirical papers faced a significant citation penalty prior to the mid-1990s.

When they restrict their attention to citations of economics papers by non-econ social science papers, they find empirical papers now benefit from a citation premium, especially since the 2000s. This suggests the applied turn has been viewed favorably, even by academics who are outside of economics.

Elsewhere, Alvaro de Menard, a participant in an experiment to see if betting markets could predict whether papers will replicate, produced this figure summarizing the market’s views on the likelihood of replication for different fields (higher is more likely to replicate).

Among the social sciences, economics is perceived to be most likely to replicate (though note the mean likelihood of replication is still below 70%!). I should also add at this point that if you are skeptical about the validity of modern economics research, I think you can still get a lot out of the rest of this post, since it’s about how a field comes to embrace new methods, not so much whether those new methods “really” are better.

So. How did this remarkable turn-around happen?

Changing a Field is Hard

Changing a field is hard. Advancing in academia is all about convincing your peers that you do good work. The people you need to convince are humans and humans are biased. In particular, they may be more or less biased towards the methods and frameworks that are already prevalent in the field.

Bias might reflect the cynical attitudes of researchers who have given up on advancing truth and knowledge, and just want to protect their turf. But bias can also emerge from other motives. Maybe biased researchers simply haven’t been trained in the new method and don’t appreciate its value. Or maybe they choose to value different aspects of methods, like interpretability over rigor. Or maybe there really is disagreement over the value of a new method - even today there is lots of debate about the proper role of experimental methods in economics. Lastly, it may just be that people subconsciously evaluate their own arguments more favorably than those advanced by other people.

Akerlof and Michaillat have a nice little 2018 paper on these dynamics that shows how even a bit of bias can keep a field stuck in a bad paradigm. Suppose there are two paradigms, an old one and a new (and better) one. How does this new paradigm take root and spread? Assume scientists are trained in one paradigm or the other and then have to convince their peers that their work is good enough to get tenure. If they get tenure, they go on to train the next generation of scientists in their paradigm.

Research is risky, and whatever paradigm they are from, it’s uncertain whether they’ll get tenure. The probability they get tenure depends on the paradigm of the person evaluating them (for simplicity, let’s assume every untenured scientist is evaluated by a single evaluator).

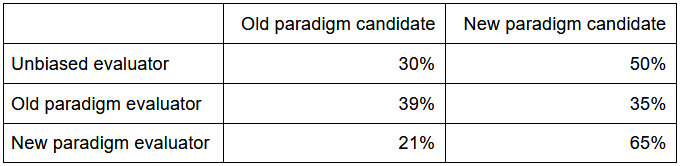

The probability a candidate gets tenure is...

In this example, the new paradigm is better, in the sense that an unbiased evaluator would give one of its adherents tenure more often than someone trained in the old paradigm (50% of the time, versus 30%). But in this model, people are biased. Not 100% biased, in the sense that they will only accept work done in their own paradigm. Instead, they are 30% biased: they give a 30% penalty to anyone from the other paradigm and a 30% bonus to anyone from their own paradigm. This means the old paradigm evaluators still favor candidates from their own paradigm, but not by much (39% versus 35%). On the other hand, the new paradigm evaluators are also biased, and since their paradigm really is better, the two effects compound and they are much more likely to grant tenure to people in their own paradigm (65% vs 21%).
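To make the bias rule concrete, here is a minimal Python sketch that reproduces the four tenure probabilities above. It assumes bias acts multiplicatively on the unbiased tenure rate (a 30% bonus means multiplying by 1.3, a 30% penalty by 0.7), which matches the numbers in the text; the names `UNBIASED`, `BIAS`, and `tenure_probability` are illustrative, not taken from Akerlof and Michaillat.

```python
# Assumed: bias multiplies the unbiased tenure rate by (1 + bias) for a
# same-paradigm candidate and by (1 - bias) for an other-paradigm candidate.
UNBIASED = {"new": 0.50, "old": 0.30}  # tenure rates under an unbiased evaluator
BIAS = 0.30


def tenure_probability(candidate: str, evaluator: str,
                       unbiased: dict = UNBIASED, bias: float = BIAS) -> float:
    """Probability a candidate gets tenure from a single evaluator."""
    multiplier = 1 + bias if candidate == evaluator else 1 - bias
    return unbiased[candidate] * multiplier


for evaluator in ("old", "new"):
    for candidate in ("old", "new"):
        p = tenure_probability(candidate, evaluator)
        print(f"{evaluator}-paradigm evaluator, {candidate}-paradigm candidate: {p:.0%}")
```

The loop prints the same four numbers as the text: 39% and 35% from an old paradigm evaluator, 21% and 65% from a new paradigm evaluator.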

What’s the fate of the new paradigm? It depends.

Suppose the new paradigm has only been embraced by 5% of the old guard, and 5% of the untenured scientists. If scientists cannot choose who evaluates them, and instead get matched with a randomly chosen tenured scientist to serve as an evaluator, then in the first generation:

95% of the new paradigm scientists are evaluated by old paradigm scientists, and only 35% of them are granted tenure

5% of the new paradigm scientists are evaluated by new paradigm scientists and 65% of them get tenure

95% of the old paradigm scientists are evaluated by old paradigm-ers, and 39% of them get tenure

5% of the old paradigm scientists are evaluated by new paradigm-ers and only 21% of these get tenure.

Thus, of the original 5% of untenured scientists in the new paradigm, only 1.8% get tenure (5% x (0.95 x 0.35 + 0.05 x 0.65)) and of the original 95% of untenured scientists in the old paradigm, 36.2% get tenure (95% x (0.95 x 0.39 + 0.05 x 0.21)). All told, 38% of the untenured scientists get tenure. They become the new “old guard” who will train and then evaluate the next generation of scientists.

In this second generation of tenured scientists, 4.8% belong to the new paradigm and 95.2% belong to the old paradigm. That is, the share of scientists in the new paradigm has shrunk. If you repeat this exercise starting with 4.8% of scientists in the new paradigm, the new paradigm fares even worse, because its members are less likely to get favorable evaluators than the previous generation. Their share shrinks to 4.6% in the third generation, then 4.4% in the fourth, and so on, down to less than 1% of the population by the 25th generation.
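The generation-by-generation arithmetic is easy to iterate in code. The sketch below assumes, as in the example, that each untenured cohort has the same paradigm mix as the tenured generation that trained it, that evaluators are drawn at random from that tenured generation, and that we track expected shares only (no randomness). The helper names `next_share` and `simulate` are hypothetical.

```python
def tenure_probability(candidate, evaluator, unbiased, bias):
    # Same multiplicative bias rule as before (an assumption matching the text).
    multiplier = 1 + bias if candidate == evaluator else 1 - bias
    return unbiased[candidate] * multiplier


def next_share(share_new, unbiased, bias):
    """Expected new-paradigm share of the next tenured generation."""
    share_old = 1 - share_new
    # Expected tenure rate for each paradigm, averaging over random evaluators.
    rate_new = (share_new * tenure_probability("new", "new", unbiased, bias)
                + share_old * tenure_probability("new", "old", unbiased, bias))
    rate_old = (share_new * tenure_probability("old", "new", unbiased, bias)
                + share_old * tenure_probability("old", "old", unbiased, bias))
    tenured_new = share_new * rate_new
    tenured_old = share_old * rate_old
    return tenured_new / (tenured_new + tenured_old)


def simulate(initial_share, generations, unbiased, bias):
    """Return the new-paradigm share for generations 1..generations."""
    shares = [initial_share]
    while len(shares) < generations:
        shares.append(next_share(shares[-1], unbiased, bias))
    return shares


UNBIASED = {"new": 0.50, "old": 0.30}
shares = simulate(0.05, 25, UNBIASED, bias=0.30)
for generation in (1, 2, 3, 4, 25):
    print(f"generation {generation}: {shares[generation - 1]:.1%}")
```

Generation 2 comes out at 4.8%, matching the hand calculation, and generation 25 lands just under 1%. Changing the first argument of `simulate` to 0.10 shows the opposite dynamic, with the share growing each generation.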

Escaping the Trap

In contrast, if the share of scientists adopting the new paradigm had been a bit bigger (10% instead of 5%, for example), then in every generation the share of new paradigm scientists would get a bit bigger. That would make it progressively easier for new paradigm scientists to get tenure, since it becomes increasingly likely they’ll be evaluated by someone favorable to their methods.

Akerlof and Michaillat show that if a new paradigm is going to take root, it needs to get itself above a certain threshold. In the example I just gave, the threshold is 8.3%. In general, this threshold is determined by just two factors: the superiority of the new paradigm and the extent of bias. The better the new paradigm and the less bias there is, the lower this threshold, and therefore the smaller the share of scientists that needs to be “converted” to the new paradigm for it to take root.
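Under the same multiplicative-bias assumption, the threshold has a simple closed form. The new paradigm’s share grows exactly when its candidates’ expected tenure rate beats the old paradigm candidates’ rate, so setting the two equal and solving for the new-paradigm share s gives s* = (p_old(1 + b) - p_new(1 - b)) / (2b(p_new + p_old)), where p_new and p_old are the unbiased tenure rates and b is the bias. This is a sketch derived from the example’s numbers, not a formula quoted from Akerlof and Michaillat’s paper; the function name `threshold` is hypothetical.

```python
def threshold(p_new, p_old, bias):
    """Share of new-paradigm evaluators above which the new paradigm grows.

    Solves  s*p_new*(1+bias) + (1-s)*p_new*(1-bias)
          = (1-s)*p_old*(1+bias) + s*p_old*(1-bias)   for s.
    Returns 0.0 when the new paradigm grows from any starting share.
    """
    if bias <= 0:
        raise ValueError("bias must be positive")
    s = (p_old * (1 + bias) - p_new * (1 - bias)) / (2 * bias * (p_new + p_old))
    return max(0.0, s)


print(f"{threshold(0.50, 0.30, 0.30):.1%}")  # the example in the text
print(f"{threshold(0.55, 0.30, 0.30):.1%}")  # a stronger new paradigm
print(f"{threshold(0.50, 0.30, 0.20):.1%}")  # less bias
```

The first call recovers the 8.3% threshold. The other two explore variations: a new paradigm that earns tenure 55% of the time from an unbiased evaluator drops the threshold to about 1%, and cutting bias to 20% removes it entirely (the function returns 0).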

The applied turn in economics looks reasonably good on these two factors.

Let’s talk about the new paradigm’s superiority first. Here, we are talking about the extent to which the new paradigm really is better than the old one, as judged by an unbiased evaluator. In general, the harder it is to deny the new paradigm is better, the better its outlook. In my example above, if the new paradigm earns tenure from an unbiased evaluator 55% of the time instead of 50%, this nearly offsets the 30% bias penalty: the threshold falls to about 1%, so starting from 5%, the new paradigm grows in each generation.

One way to establish a paradigm’s superiority is if it is able to resolve questions or anomalies that the prior paradigm failed to answer (this is closely related to Kuhn’s classic model of paradigms in science). Arguably, this was the case with the new quasi-experimental and experimental methods in economics. As retold in Angrist and Pischke’s “The Credibility Revolution,” a 1986 paper by LaLonde was quite influential.

LaLonde had data on an actual economic experiment: in the mid-1970s, a national job training program was randomly given to some qualified applicants and not to others (who were left to fend for themselves). Comparing outcomes in the treated and control groups indicated the program raised incomes by a bit under $900 in 1979. In contrast, economics’ older methods produced highly variable estimates, some off by more than $1,000 in either direction.

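The second factor that matters in Akerlof and Michaillat is the extent of bias. If the bias in this example were 20% instead of 30%, the new paradigm would gain more converts in each generation, further raising the tenure prospects for new paradigm-ers. In fact, at 20% bias the threshold disappears: even an old paradigm evaluator would grant a new paradigm candidate tenure 40% of the time, versus 36% for a candidate from the evaluator’s own paradigm.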

I suspect certain elements of quasi-experimental methods also helped reduce the bias these methods faced when they were taking root in economics. It’s probably important here that economics has a long history of thinking of experiments as an ideal but regrettably unattainable method. When some people showed that these methods were indeed usable, even in an economic context, it was easier to accept them than it would have been in a field that did not value experiments even in principle. Moreover, some quasi-experimental ideas could easily be understood in terms of long-standing empirical approaches in economics (like distinguishing between exogenous and endogenous variables).

So, given all that, the applied turn in economics probably had a relatively low bar to clear. But is that the whole story? I don’t think so, for two reasons.

First, this version of the story is really flattering to economists. Basically, it says the applied turn happened because economists were not that biased against this kind of paradigm shift, and because good work convinced them of its value. But we should be skeptical of explanations that flatter our self-image.

Second, even if the threshold economics needed to clear was low, as long as there was any bar at all, how did the new paradigm get above it? Any new paradigm tends to start with a very small number of adopters. How does this tiny group get above the threshold?

One possibility is simple luck. This is a bit obscured in my presentation; the dynamics I describe are only the averages. The new paradigm lot could get lucky and be assigned to a greater share of new paradigm tenured evaluators than the average, or they might catch a few breaks from old paradigm evaluators. If a bit of luck pushes them over a crucial threshold (in this case, 8.3%), then they can expect to keep growing in each generation. Luck is especially important when fields are small.
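The role of chance is easy to see in a small Monte Carlo sketch. Using the same assumed numbers, it draws each candidate’s paradigm, evaluator, and tenure decision at random, then asks how often a single generation of luck lifts a 5% new paradigm above the 8.3% threshold. The field sizes, number of runs, seed, and function names are arbitrary, hypothetical choices for illustration.

```python
import random

UNBIASED = {"new": 0.50, "old": 0.30}
BIAS = 0.30
THRESHOLD = 1 / 12  # about 8.3%, the threshold in the text's example


def tenure_probability(candidate, evaluator):
    multiplier = 1 + BIAS if candidate == evaluator else 1 - BIAS
    return UNBIASED[candidate] * multiplier


def one_generation(field_size, share_new, rng):
    """Draw one generation of tenure decisions; return the new-paradigm share."""
    tenured = {"new": 0, "old": 0}
    for _ in range(field_size):
        # Candidate paradigm and evaluator are both drawn from the current mix.
        candidate = "new" if rng.random() < share_new else "old"
        evaluator = "new" if rng.random() < share_new else "old"
        if rng.random() < tenure_probability(candidate, evaluator):
            tenured[candidate] += 1
    total = tenured["new"] + tenured["old"]
    # If nobody gets tenure (rare), keep the previous mix.
    return tenured["new"] / total if total else share_new


def chance_of_lucky_break(field_size, runs=300, seed=0):
    """Fraction of runs in which a 5% new paradigm jumps above the threshold."""
    rng = random.Random(seed)
    hits = sum(one_generation(field_size, 0.05, rng) > THRESHOLD for _ in range(runs))
    return hits / runs


for size in (20, 200, 2000):
    print(f"{size:>5} candidates: {chance_of_lucky_break(size):.0%} of runs cross the threshold")
```

In a field of 20 candidates, a single lucky tenure decision moves the share by several percentage points, so the threshold gets crossed in a sizable fraction of runs. With thousands of candidates, the share stays pinned near its 4.8% average and a lucky break of that size essentially never happens.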

In economics, the important role of a single economist, Orley Ashenfelter, is often highlighted. In their short history of the rise of experimental methods in economics, Panhas and Singleton note:

...Ashenfelter eventually returned to a faculty position at Princeton. In addition to his promoting the use of quasi-experimental methods in economics through both his research and his role as editor of the American Economic Review beginning in 1985, he also advised some of the most influential practitioners who followed in this tradition, including 1995 John Bates Clark Medal winner David Card and, another of the foremost promoters of quasi-experimental methods, Joshua Angrist.

We will return to Ashenfelter in a bit. But if he had not been in this position at this time (editor of the flagship journal in economics and an enthusiastic proponent of the new methods), it’s possible that things might have turned out differently for the applied turn.

All of these factors are probably part of the story of the applied turn in economics. But a 2020 preprint by O’Connor and Smaldino suggests a fourth factor that I think also turned out to be very important.

O’Connor and Smaldino suggest interdisciplinarity can also be an avenue for new paradigms to take root in a field. The intuition is simple: start with a model like Akerlof and Michaillat’s, but assume there is also some probability that you will be evaluated by someone from another field. This doesn’t have to be a tenure decision - it could also represent peer review from outside your discipline that helps build a portfolio of published research.

If that other field has embraced the new paradigm, this introduces a new penalty for those using the old paradigm, since they get dinged any time they are reviewed by an outsider, and it raises the payoff to adopting the new paradigm, since adopters benefit any time an outsider reviews them. If we add a 10% probability that you are evaluated by someone from another field that has completely embraced the new paradigm, this is also enough to ensure the share of new paradigm scientists grows in each period.
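O’Connor and Smaldino’s idea can be bolted onto the same toy model. In the sketch below, with probability `outside_prob` the evaluator is an outsider who always belongs to the new paradigm; otherwise the evaluator is a randomly drawn tenured insider. This is a simplified reading of their setup using the earlier example’s numbers, not their actual model, and the function names are hypothetical.

```python
UNBIASED = {"new": 0.50, "old": 0.30}
BIAS = 0.30


def tenure_probability(candidate, evaluator):
    multiplier = 1 + BIAS if candidate == evaluator else 1 - BIAS
    return UNBIASED[candidate] * multiplier


def tenure_rates(share_new, outside_prob):
    """Expected tenure rate for each paradigm's candidates.

    With probability `outside_prob` the evaluator is an outsider who uses the
    new paradigm; otherwise it is a randomly drawn tenured insider.
    """
    p_new_evaluator = outside_prob + (1 - outside_prob) * share_new
    return {
        candidate: p_new_evaluator * tenure_probability(candidate, "new")
        + (1 - p_new_evaluator) * tenure_probability(candidate, "old")
        for candidate in ("new", "old")
    }


def simulate(initial_share, generations, outside_prob):
    """New-paradigm share of tenured insiders for generations 1..generations."""
    shares = [initial_share]
    while len(shares) < generations:
        s = shares[-1]
        rates = tenure_rates(s, outside_prob)
        tenured_new = s * rates["new"]
        tenured_old = (1 - s) * rates["old"]
        shares.append(tenured_new / (tenured_new + tenured_old))
    return shares


for outside_prob in (0.0, 0.10):
    shares = simulate(0.05, 25, outside_prob)
    print(f"outsider probability {outside_prob:.0%}: "
          f"{shares[0]:.1%} -> {shares[-1]:.1%} after 25 generations")
```

With `outside_prob=0.10`, new paradigm candidates out-earn old paradigm candidates in tenure even when no insiders share their paradigm (38% versus 37.2%), so the share rises from any starting point. With `outside_prob=0.0`, the model collapses back to the shrinking path described earlier.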

Outside Reviewers in Economics

At first, this wouldn’t seem to be relevant. Economics has a reputation for being highly insular, with publication in one of five top economics journals a prerequisite for tenure in many departments. When Leamer was writing in 1983, well under 5% of citations made in economics articles were to other social science journals (which compares unfavorably to other social sciences). Even if another field had been using the quasi-experimental methods that would later become popular in economics, its members weren’t going to be reviewing many economics articles.

But economics is unusual in having a relatively large non-academic audience for its work: the policy world. Economists testify before Congress at twice the rate of all other social scientists combined, and this was especially true in the period when Leamer was writing. I think policy-makers of various stripes played a role analogous to the one O’Connor and Smaldino argue can be played by other academic disciplines.

The quasi-experimental methods that came to prominence in the applied turn began in applied microeconomics, more specifically in the world of policy evaluation. Policy-makers needed to evaluate different policies, and this group viewed experimental methods as more credible than existing alternatives (such as assuming a model and then estimating its parameters). Panhas and Singleton’s 2017 history of the rise of experimental methods in economics highlights these roots in the world of policy evaluation:

The late 1960s and early 1970s saw field experiments gain a new role in social policy, with a series of income maintenance (or negative income tax) experiments conducted by the US federal government. The New Jersey experiment from 1968 to 1972 “was the first large-scale attempt to test a policy initiative by randomly assigning individuals to alternative programs” (Munnell 1986). Another touchstone is the RAND Health Insurance Experiment, started in 1971, which lasted for fifteen years.

This takes us back to Orley Ashenfelter. Upon graduating with a PhD from Princeton in 1970, Ashenfelter was appointed director of the Office of Evaluation at the US Department of Labor. As Panhas and Singleton write:

[Ashenfelter] recalled about his time on the project using difference-in-differences to evaluate job training that “a key reason why this procedure was so attractive to a bureaucrat in Washington, D.C., was that it was a transparent method that did not require elaborate explanation and was therefore an extremely credible way to report the results of what, in fact, was a complicated and difficult study” (Ashenfelter 2014, 576). He continued: “It was meant, in short, not to be a method, but instead a way to display the results of a complex data analysis in a transparent and credible fashion.” Thus, as policymakers demanded evaluations of government programs, the quasi-experimental toolkit became an appealing (and low cost) way to provide simple yet satisfying answers to pressing questions.

This wasn’t the only place that the economics profession turned to quasi-experimental methods to satisfy skeptical policy-makers. In another overview of the applied turn in economics, Backhouse and Cherrier write:

...in 1981 Reagan made plans to slash the Social Sciences NSF budget by 75 percent, forcing economists to spell out the social and policy benefits of their work more clearly. Lobbying was intense and difficult. Kyu Sang Lee (2016) relates how the market organization working group, led by Stanley Reiter, singled out a recent experiment involving the Walker mechanism for allocation of a public good as the most promising example of policy-relevant economic research.

So it seems at least part of the success of the applied turn in economics was the existence of a group outside of the academic field of economics who favored work in the new “paradigm” and that this allowed the methods to get a critical toehold in the academic realm.

Through the 1990s and 2000s, the share of articles using quasi-experimental terms rose, led by applied microeconomics. But by the 2010s, the experimental method also began to rise rapidly in another field: economic development. This too was a story about economics’ contact with the policy world, albeit a different set of policy-makers.

Economic Development and the Rise of the RCT

de Souza Leão and Eyal (2019) also argue the rise of a key new method in development economics, the randomized controlled trial (RCT), was not the inevitable result of the method’s inherent superiority. They point out that the current enthusiasm for RCTs in international development is actually the second such wave of enthusiasm, after an earlier wave dissipated in the early 1980s.

The first wave of RCTs in international development occurred in the 1960s-1980s, and was largely led by large government funders engaging in multi-year evaluations of big projects or agencies. In this first wave, experimental work was not in fashion in academic economics, and experiments were instead run primarily by researchers in public health, medicine, and other social sciences, as well as non-academics. Enthusiasm for this approach waned for a variety of reasons. The length and scale of the projects heightened concerns about the ethics of giving some groups access to a potentially beneficial program and not others. Moreover, this criticism was particularly acute when directed at government funders, who are necessarily responsive to political considerations, and who, it could be argued, have a special duty to provide universal access to potentially beneficial programs. The upshot was that many experiments were not run as initially planned (after political interference), and a lot of time and money was spent on evaluations that weren’t that informative.

The second wave, on the other hand, shows no sign of slowing down. The second wave of RCTs in international development began when the international development community fragmented after the breakdown of the Washington consensus. International NGOs and philanthropists were a new source of funding for experiments. Compared to governments, international NGOs and philanthropists were more insulated from arguments about the ethics of offering an intervention to only part of a population. They did not claim to represent the general will of the population, and budget constraints usually meant that universal access was infeasible anyway. Moreover, these interventions tended to be shorter and smaller, which also blunted the argument that experiments were unethical. (Critiques of the ethics of RCTs in economics remain quite common, though.)

On the economist’s side, appetite for using RCT methods had become somewhat established by this time, thanks to its start in applied microeconomics. Moreover, economists were content to study small or even micro interventions, because of a belief that economic theory provided a framework that would allow a large number of small experiments to add up to more than the sum of their parts. Whereas the first wave of RCTs was conducted by a wide variety of different academic disciplines, this second wave is dominated by economists. This also creates a critical mass in the field, where economists using RCTs can be confident their work will find a sympathetic audience from their peers.

How to Change a Field

So, to sum up, it’s hard to change a field if people are biased against that change, since any change necessarily has a small number of adherents at the outset. One way change can happen, though, is if an outside group sympathetic to the innovation provides space for its adherents to grow. Above a threshold, the change can be self-perpetuating. In economics, the rise of quasi-experimental methods probably owes something to the presence of the policy world, which liked these methods and allowed them to take root. It was also important, however, that these methods credibly established their utility in a few key circumstances, and that they could be framed in a way consistent with earlier work.

The next newsletter (February 2) will be about one reason cities are so innovative: because they create encounters between people who would not normally meet.

If you enjoyed this post, you might also enjoy:

Does science self-correct? (evidence from retractions)

Does chasing citations lead to bad science? (sometimes yes, in general no)

How bad is publish-or-perish for the quality of science? (it’s not great)