Citation is the currency of science. New scientific discoveries build on the work that came before, and scientists “purchase” the support of that earlier work by citing it. In turn, scientists hope their own work will prove useful to others and be cited. The number of citations your work receives over a lifetime becomes a proxy for how highly the scientific community values it, and that recognition is what scientists are really after.

At least, that’s a simplified version of the story David Hull tells in Science as a Process: An Evolutionary Account of the Social and Conceptual Development of Science. It’s an elegant story. The pursuit of citations directs effort towards problems whose solutions will be most useful to the production of new knowledge, and incentivizes scientists to promptly disclose their results and make them accessible. When it works, the system accelerates discovery.

But it can also go wrong. It’s a self-referential system: what’s valuable is what gets cited, and what gets cited is what helps you generate citations. If your discipline has a lot of people debating how many angels can dance on the head of a pin, then debating that topic becomes the way you get citations. In short, if citations are the currency of science, then the citation market is one where bubbles are theoretically possible.

That’s essentially the charge leveled against theoretical fundamental physics in Sabine Hossenfelder’s Lost in Math: How Beauty Leads Physics Astray. Hossenfelder argues that theoretical fundamental physics is stuck in a quagmire because the field has become so far removed from experimental testing that aesthetic criteria now guide theory development. Beautiful work is what gets cited, and so people work hard to develop beautiful theories, whether or not they’re true.

Similar charges have been leveled against economics. In a common version of the argument, it’s an insular field obsessed with writing complicated mathematical models built on a caricature of human psychology, with the purpose of “proving” the free market is best. If that is how academic economics really works, then to get citations in economics you need to play along, whether or not the models are true.

Angrist et al. (2020) test this story by looking at how other fields cite economics research. If economics research doesn’t point toward any universal truths, it should be less useful to other fields (psychology, sociology, political science, computer science, statistics, etc.) that do not share economics’ supposed ideological slant. To check this idea, they assign journals to disciplines based on how frequently the articles they publish are cited by each field’s flagship journals. They then look at the share of citations those articles make to economics and to other social sciences.

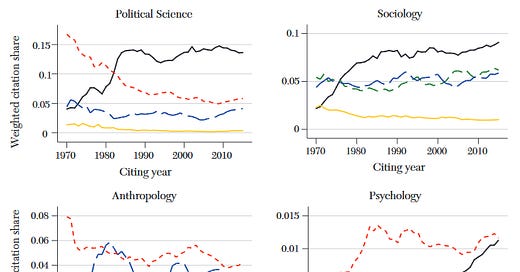

(Here’s an example: the weighted share of citations from different social sciences to economics (in black), sociology (in red), and other fields.)

Fortunately, they find that the usefulness of economics research to other fields has been improving, and in many cases it looks pretty favorable relative to other social sciences. For example, political science and sociology articles are more likely to cite economics research than research from other social sciences, as are articles in finance, accounting, statistics, and mathematics. In psychology and computer science, economics has in recent years been more or less tied for first with another social science. In other fields, citations to economics are lower than citations to other social sciences, but it would be surprising if that weren’t the case.

Even more encouragingly, chasing citations in economics appears to be at least partially aligned with producing work that is useful outside the field. Angrist and coauthors run a regression predicting the citations a particular economics article receives from outside economics as a function of article characteristics, including the number of citations it receives from other economics papers. A 10% increase in citations from economics papers is associated with a 6% increase in citations from outside economics. In other words, papers that get cited a lot within economics are also more likely to get cited by other fields.
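The “10% more, 6% more” phrasing is the language of an elasticity. Here is a minimal sketch, assuming the relationship is estimated as a log-log regression. The real Angrist et al. regression also includes other article characteristics, which this omits. On synthetic data generated with a hypothetical elasticity of 0.6, an ordinary least-squares fit of log outside citations on log economics citations recovers a slope near 0.6. The data, the 0.6 value, and the function names are illustrative assumptions, not the paper’s.

```python
import math
import random


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs (single regressor)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def implied_change(elasticity, pct_increase):
    """Percent change in outside citations implied by a log-log elasticity."""
    return ((1 + pct_increase / 100) ** elasticity - 1) * 100


# Hypothetical synthetic data, NOT Angrist et al.'s data.
random.seed(0)
TRUE_ELASTICITY = 0.6  # assumed, chosen to mirror the reported 10% -> 6% figure
econ_cites = [random.randint(1, 500) for _ in range(5000)]  # counts >= 1, so log is defined
log_econ = [math.log(c) for c in econ_cites]
log_outside = [0.5 + TRUE_ELASTICITY * lx + random.gauss(0, 0.5) for lx in log_econ]

beta = ols_slope(log_econ, log_outside)
print(f"estimated elasticity: {beta:.2f}")
print(f"10% more econ citations -> {implied_change(beta, 10):.1f}% more outside citations")
```

Strictly, an elasticity of 0.6 turns a 10% increase into (1.1^0.6 − 1), or about 5.9%, which rounds to the 6% quoted above. Real citation counts include zeros, so an actual analysis would need something like log(1 + citations) or a count model. The sketch sidesteps this by drawing counts of at least 1.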

So the citation market in economics is not purely a bubble. But what about science more generally?

Poege et al. (2019) provide a useful check here. They look at the value of patents that cite scientific work and compare the value of the patents to the number of citations the cited article received from other articles (i.e., not from patents).

People don’t apply for patents to earn citations; they apply for them to turn a profit. So patent-holders aren’t playing the citation game. If the citation market in science is a bubble, then ivory-tower academics chase citations by arguing about how many angels can dance on the head of a pin (or whatever the current fad is). Sometimes that generates useful knowledge, but the number of citations an article receives is basically unrelated to how useful its ideas are to those outside the ivory tower. In contrast, if the citation market is functioning well, it directs scientists toward discovering universal truths that are generally applicable. In that case, highly cited work is more likely to be useful to those trying to invent new technologies.

Fortunately, Poege and coauthors find the most highly cited scientific papers are indeed more likely to be cited by patents.

(In the figure above, science quality is an article’s percentile rank for citations received in the three years after publication)
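To make the “science quality” measure concrete, here is a minimal sketch of percentile-ranking papers by citations. It assumes each paper’s three-year citation count has already been tallied, and that papers are ranked within cohorts sharing the same field and publication year. That cohort definition, the midpoint treatment of ties, and the names `percentile_ranks` and `science_quality` are hypothetical choices for illustration, not necessarily what Poege et al. do.

```python
from bisect import bisect_left, bisect_right
from collections import defaultdict


def percentile_ranks(citations):
    """Percentile rank (0-100) of each citation count within one cohort.

    Ties share the midpoint rank, so equal counts get equal scores.
    """
    ordered = sorted(citations)
    n = len(ordered)
    ranks = []
    for c in citations:
        below = bisect_left(ordered, c)           # papers with strictly fewer citations
        equal = bisect_right(ordered, c) - below  # papers with the same count (incl. this one)
        ranks.append(100 * (below + 0.5 * equal) / n)
    return ranks


def science_quality(papers):
    """Map paper id -> percentile rank within its (field, publication year) cohort.

    `papers` is an iterable of (paper_id, field, pub_year, three_year_citations).
    """
    cohorts = defaultdict(list)
    for paper_id, field, pub_year, cites in papers:
        cohorts[(field, pub_year)].append((paper_id, cites))
    scores = {}
    for members in cohorts.values():
        ranks = percentile_ranks([cites for _, cites in members])
        for (paper_id, _), rank in zip(members, ranks):
            scores[paper_id] = rank
    return scores


# Hypothetical example cohort data.
papers = [
    ("a", "biology", 2000, 0),
    ("b", "biology", 2000, 2),
    ("c", "biology", 2000, 2),
    ("d", "biology", 2000, 5),
    ("e", "biology", 2000, 40),
    ("f", "physics", 2000, 3),
]
print(science_quality(papers))
```

Ranking within cohorts keeps a paper from looking better simply because it appeared in a fast-citing field or an unusually well-cited year.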

Moreover, patents that cite highly cited papers are themselves more valuable by various measures (such as how often the patent is cited, or how the stock price of the patent-holder changes when the patent is granted).

And patents that do not directly cite highly cited research, but do cite patents that cite such research, are also more valuable than patents with no citation chain connecting them to highly cited scientific research.
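The “citation chain” idea amounts to a shortest-path question on the citation graph. Here is a minimal sketch, assuming the graph is stored as a dictionary mapping each patent or paper to the items it cites. A breadth-first search from a patent returns 1 if the patent cites a highly cited paper directly, 2 if it cites a patent that does, and so on. It returns `None` if no chain exists within the hop limit. The graph representation, the `max_hops` cutoff, and the function name are hypothetical and not taken from Poege et al.

```python
from collections import deque


def distance_to_science(patent, cites, top_papers, max_hops=4):
    """Fewest citation hops from `patent` to any paper in `top_papers`.

    `cites` maps each node (patent or paper) to the nodes it cites.
    Returns None if no chain of at most `max_hops` hops exists.
    """
    seen = {patent}
    frontier = deque([(patent, 0)])
    while frontier:  # breadth-first, so the first hit is the shortest chain
        node, hops = frontier.popleft()
        if hops == max_hops:
            continue  # expanding further would exceed the hop limit
        for cited in cites.get(node, ()):
            if cited in top_papers:
                return hops + 1
            if cited not in seen:  # guard against cycles and revisits
                seen.add(cited)
                frontier.append((cited, hops + 1))
    return None


# Hypothetical toy citation graph.
citation_graph = {
    "P1": ["paper_X"],        # cites a highly cited paper directly
    "P2": ["P1", "paper_Y"],  # cites a patent that cites it
    "P3": [],                 # no chain to highly cited science
}
top_papers = {"paper_X"}
for patent in ("P1", "P2", "P3"):
    print(patent, distance_to_science(patent, citation_graph, top_papers))
```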

There is much more to say about how well (or poorly) the citation system works in science, and I plan to write more later. But these two studies appear to largely rule out the worst-case scenario: the citation system seems to direct research toward useful ends, where usefulness is defined by utility to those outside the immediate research community.