Steering Science with Prizes

Public Honors as Coordination Devices

Like the rest of New Things Under the Sun, this article will be updated as the state of the academic literature evolves; you can read the latest version here.



New scientific research topics can sometimes face a chicken-and-egg problem. Professional success requires a critical mass of scholars to be active in a field, so that they can serve as open-minded peer reviewers and can validate (or at least cite!) new discoveries. Without that critical mass,1 working on a new topic might be professionally risky. But if everyone thinks this way, then how do new research topics emerge? After all, there is usually no shortage of interesting new things to work on; how do groups of people pick which one to focus on?

One way is via coordinating mechanisms: a small number of universally recognized markers of promising research topics. The key ideas are that these markers are:

Credible, so that seeing one is taken as a genuine signal that a research topic is promising

Scarce, so that they do not divide a research community among too many different topics

Public, so that everyone knows that everyone knows about the markers

Prizes, honors, and other forms of recognition can play this role (in addition to other roles). Prestigious prizes and honors tend to be prestigious precisely because the research community agrees that they are bestowed on deserving researchers. They also tend to be comparatively rare, and followed by much of the profession. So they satisfy all the conditions.

This isn’t the only goal of prizes and honors in science. But let’s look at some evidence about how well prizes and other honors work at helping steer researchers towards specific research topics.

Howard Hughes Medical Institute Investigators

We can start with two papers by Pierre Azoulay, Toby Stuart, and various co-authors. Each paper looks at the broader impacts of being named a Howard Hughes Medical Institute (HHMI) investigator, a major honor for a mid-career life scientist that comes bundled with several years of relatively no-strings-attached funding. While the award is given to provide resources to talented researchers, it is also a tacit endorsement of their research topics and could be read by others in the field as a sign that further research along that line is worthwhile. We can then check whether the topics elevated in this manner go on to receive more research attention by seeing whether they start to receive more citations.

In each paper, Azoulay, Stuart, and coauthors focus on the fates of papers published before the HHMI investigatorship has been awarded. That’s because papers written after the appointment might get higher citations for reasons unconnected to the coordinating role of public honors: it could be, for instance, that the increased funding resulted in higher quality papers which resulted in more citations, or that increased prestige allowed the investigator to recruit more talented postdocs, which resulted in higher quality papers and more citations. By restricting our attention to pre-award papers, we don’t have to worry about all that. Among pre-award papers, there are two categories of paper: those written by the (future) HHMI investigator themselves, and those written by their peers working on the same research topic. Azoulay, Stuart, and coauthors look at each separately.

Azoulay, Stuart, and Wang (2014) looks at the fate of papers written by an HHMI investigator before their appointment. The idea is to compare papers of roughly equal quality, where in one case the author of the paper gets an HHMI investigatorship and in the other case doesn’t. For each pre-award paper by an HHMI winner, they match it with a set of “control” papers of comparable quality. These controls are published in the same year, in the same journal, with the same number of authors, and the same number of citations at the point when the HHMI investigatorship is awarded. Most importantly, the control paper is also written by a talented life scientist, with the same position (for example, first author or last author, which matters in the life sciences), but who did not win an HHMI investigator position. Instead, this life scientist won an early career prize.
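To make the matching design more concrete, here is a minimal Python sketch of exact matching, assuming each paper is a record with hypothetical fields for publication year, journal, author count, citations at the time of the award, and the author's position. It simplifies the actual design: it requires identical raw citation counts (in practice one might match within citation bins), and it does not impose the requirement that control authors won an early career prize.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    # All field names are hypothetical, chosen for illustration.
    paper_id: str
    year: int              # publication year
    journal: str
    n_authors: int
    cites_at_award: int    # citations accumulated by the award year
    author_position: str   # e.g. "first" or "last"
    future_hhmi: bool      # True if this author later became an HHMI investigator


def match_key(p: Paper) -> tuple:
    """The attributes a treated paper and its controls must share exactly."""
    return (p.year, p.journal, p.n_authors, p.cites_at_award, p.author_position)


def match_controls(papers: list[Paper]) -> dict[str, list[str]]:
    """Map each future-HHMI paper to the ids of non-HHMI papers with an identical key."""
    pool = defaultdict(list)
    for p in papers:
        if not p.future_hhmi:
            pool[match_key(p)].append(p.paper_id)
    return {p.paper_id: pool.get(match_key(p), []) for p in papers if p.future_hhmi}


papers = [
    Paper("t1", 1990, "Cell", 4, 25, "last", True),
    Paper("c1", 1990, "Cell", 4, 25, "last", False),
    Paper("c2", 1990, "Cell", 5, 25, "last", False),  # differs in author count
]
print(match_controls(papers))  # {'t1': ['c1']}
```

Matching on a tuple key keeps the logic transparent: a control either shares every attribute with the treated paper or it is not used.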

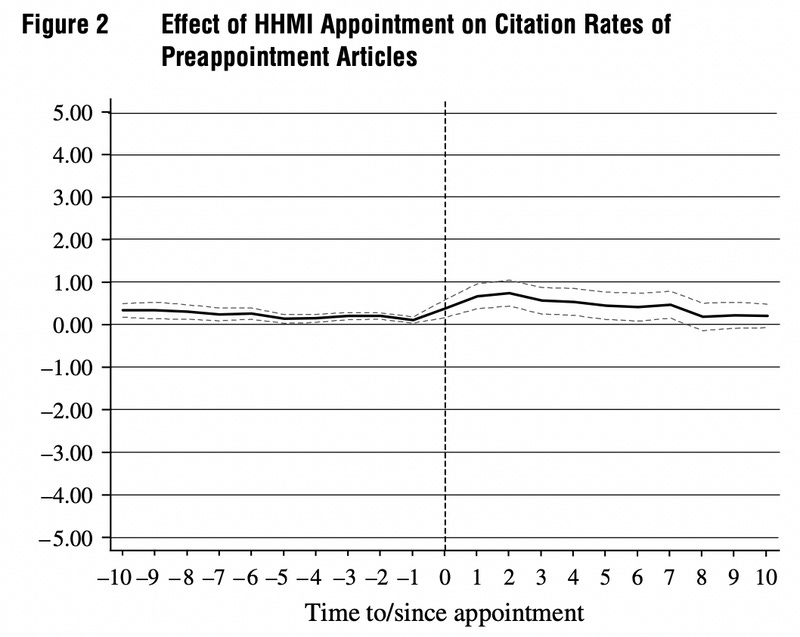

If people decide what to work on and what to cite simply by reading the literature and evaluating its merits, then whatever happens to the author after the article is published shouldn’t be relevant. But that’s not the case. The figure below shows the extra citations, per year, for the articles of future HHMI investigators, relative to their controls who weren’t so lucky. We can see there is no real difference in the ten years leading up to the award, but then after the award a small but persistent nudge up for the articles written by HHMI winners.
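The quantity in that figure, extra citations per year relative to matched controls, can be approximated with a simple difference in means, indexed by year relative to the award. This is a minimal sketch with hypothetical inputs, not the econometric model the authors actually estimate.

```python
from collections import defaultdict
from statistics import mean


def citation_gap_by_event_year(treated, controls, award_year):
    """Average citation gap (treated minus mean of its matched controls), by years since award.

    treated:    {paper_id: {calendar_year: citations received that year}}
    controls:   {paper_id: [{calendar_year: citations received that year}, ...]}
    award_year: {paper_id: year the treated paper's author was appointed}
    """
    gaps = defaultdict(list)
    for pid, series in treated.items():
        for year, cites in series.items():
            # Citations that year for each matched control that has data for it.
            ctrl = [c[year] for c in controls.get(pid, []) if year in c]
            if ctrl:
                gaps[year - award_year[pid]].append(cites - mean(ctrl))
    # Negative keys are pre-award years, 0 is the award year, positive keys are post-award.
    return {k: mean(v) for k, v in sorted(gaps.items())}


treated = {"t1": {1994: 3, 1995: 4, 1996: 6}}
controls = {"t1": [{1994: 3, 1995: 4, 1996: 4}, {1994: 3, 1995: 4, 1996: 5}]}
print(citation_gap_by_event_year(treated, controls, {"t1": 1995}))
# {-1: 0, 0: 0, 1: 1.5}
```

A flat gap near zero before the award and a positive gap afterward is the pattern the figure describes.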

That bump could arise for a number of different reasons. We’ll dig into what exactly is going on in a minute. But one possibility is that the HHMI award steered more people to work on topics similar enough to the HHMI winner’s that it was appropriate to cite their work. A simple way to test this hypothesis is to see if other papers in the same topic also enjoy a citation bump after the topic is “endorsed” by the HHMI, even though the authors of these articles didn’t get an HHMI appointment themselves.

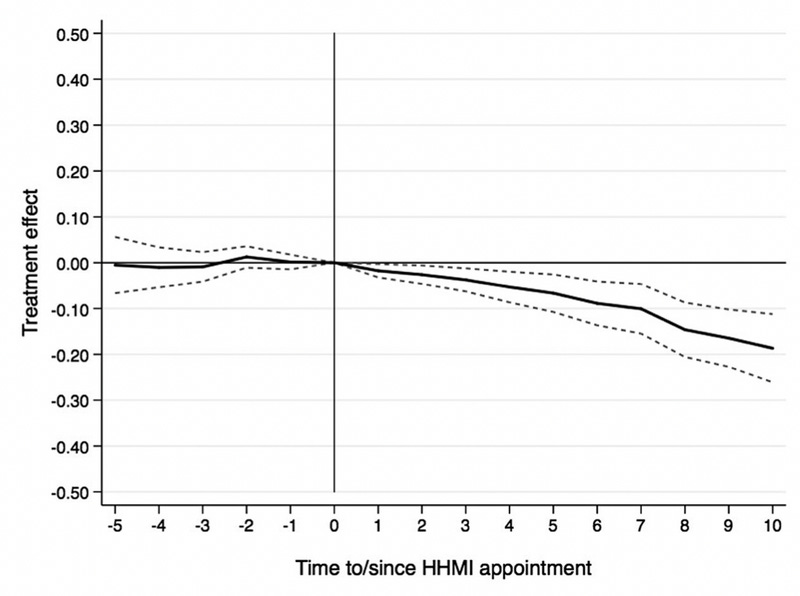

But that’s not what happens! Reschke, Azoulay, and Stuart (2018) looks into the fate of articles written by HHMI losers2 on the same topic as HHMI winners. For each article authored by a future HHMI winner, Reschke, Azoulay, and Stuart use the PubMed Related Articles algorithm to identify articles that are on similar topics. They then compare the citation trajectory of these articles on HHMI-endorsed topics to control articles that belong to a different topic, but were published in the same journal issue. As the figure below shows, in the five years prior to the award, these articles (published in the same journal issue) have the same citation trajectories. But after the HHMI decides someone else’s research on the topic merits an HHMI investigatorship, papers on the same topic fare worse than papers on different topics!

Given the contrasting results, it’s hard not to think that the HHMI award has resulted in a redistribution of scientific credit to the HHMI investigator and away from peers working on the same topic.

So maybe awards don’t actually redirect research effort. Maybe they just shift who gets credit for ideas? The truth seems to be that it’s a bit of both.

To see if both things are going on, we can try to identify cases where the coordination effect of prizes might be expected to be strong, and compare those to cases where we might expect it to be weak. For example, for research topics where there is already a positive consensus on the merit of the topic, prizes might not do much to induce new researchers to enter the field. Everyone already knew the field was good, and it may already be crowded by the time HHMI gives an award. In that case, the main impact of a prize might be to give a winner a greater share of the credit in “birthing” the topic. In contrast, for research topics that have been hitherto overlooked, the coordinating effect of a prize should be stronger. In these cases, a prize may prompt outsiders to take a second look at the field, or novice researchers might decide to work on that topic because they think it has a promising future. It’s possible these positive effects are enough so that everyone working on these hitherto overlooked topics benefits, not just the HHMI winner.

Azoulay, Stuart, and coauthors get at this in a few different ways. First, among HHMI winners, the citation premium their earlier work receives is strongest precisely for the work where we would expect the coordinating role of prizes to be more important. It turns out most of the citation premium accrues to more recent work (published the year before getting the HHMI appointment), or more novel work, where novelty is defined as being assigned relatively new biomedical keywords, or relatively unusual combinations of existing ones. HHMI winners also get more citations (after their appointment) for work published in lower-impact journals, or if they are themselves relatively less cited overall at the time of their appointment.

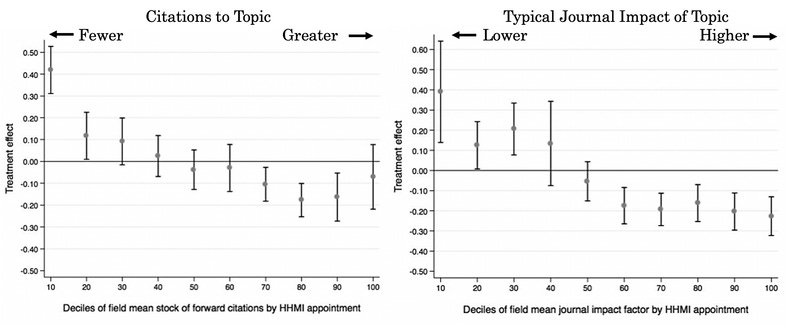

And these effects appear to benefit HHMI losers too. The following two figures plot the citation impact of someone else getting an HHMI appointment for work on the same topic. But these figures estimate the effect separately for many different categories of topic. In the left figure below, topics are sorted into ten different categories, based on the number of citations that have collectively been received by papers published in the topic. At left, we have the topics that collectively received the fewest citations, at right the ones that received the most (up until the HHMI appointment). In the right figure below, topics are instead sorted into ten different categories based on the impact factor of the typical journal where the topic is published. At left, topics typically published in journals with a low impact factor (meaning the articles of these journals usually get fewer citations), at right the ones typically published in journals with high impact factors.
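Sorting topics into ten categories by pre-award citations is a decile split. As a minimal sketch, assuming one pre-award citation total per topic and a hypothetical per-topic measure of how non-winners' citations changed after the award, the binning and a raw within-decile average could look like this. The published estimates come from regression models, so treat this only as an illustration of the grouping.

```python
from collections import defaultdict
from statistics import mean


def decile_bins(values):
    """Rank items by value and split them into ten equal-count bins (0 = lowest, 9 = highest)."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    bins = [0] * n
    for rank, i in enumerate(order):
        bins[i] = rank * 10 // n
    return bins


def mean_effect_by_decile(pre_award_cites, post_award_change):
    """Average a per-topic outcome within each decile of pre-award topic citations.

    pre_award_cites:   citations received by each topic's papers up to the award
    post_award_change: hypothetical per-topic change in non-winners' citations after the award
    """
    grouped = defaultdict(list)
    for b, change in zip(decile_bins(pre_award_cites), post_award_change):
        grouped[b].append(change)
    return {b: mean(v) for b, v in sorted(grouped.items())}


cites = list(range(20))
changes = [1.0] * 10 + [-1.0] * 10  # hypothetical: low-cited topics gain, high-cited topics lose
by_decile = mean_effect_by_decile(cites, changes)
print(by_decile[0], by_decile[9])  # 1.0 -1.0
```

The same binning works for the impact-factor panel by passing each topic's typical journal impact factor instead of its citation total.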

The effect of the HHMI award on other people working on the same topic varies substantially across these categories. For topics that have not been well cited at the time of the HHMI appointment, or which do not typically publish well, the impact of the HHMI appointment is actually positive! That is, if you are working on a topic that isn’t getting cited and isn’t placing in good journals, and then someone else working on the same topic wins an HHMI investigatorship, that is good news for your citations. These are precisely the kinds of topics where we might think prizes can usefully direct people’s attention, since they have been so far overlooked. In contrast, for more established topics, where the papers already get citations and publish reasonably well, if someone else working on the topic wins an HHMI award and you don’t, that’s bad news for your citations.

To sum up, prizes have two effects: they pull more research attention to a topic, and they shift more of the credit for that topic to the prize winner. On average, the second effect is the stronger of the two, so that people working on a topic but who are not elevated with an HHMI investigatorship are worse off after the HHMI makes its choice. But for overlooked topics, the coordinating effect of public honors seems to be strong enough to offset this.

Do Citations Measure Research Effort?

If we accept the previous papers, we still might have a few questions about prizes in general. First, both papers looked at a very specific kind of honor - the Howard Hughes Medical Institute investigatorship. Is there something special about that program? Are these results more general? Second, these papers look at citations, and for the purposes of this post, we might prefer more direct measures of research attention. For complementary evidence that addresses both these questions, we can turn to Jin, Ma, and Uzzi (2021), who look at a much broader set of prizes with a much broader set of measures of research attention. The tradeoff is we have less granular detail on what’s going on with any one prize.

Jin, Ma, and Uzzi pull data on 405 different scientific prizes bestowed 2900 times since 1970, and map these to thousands of different research topics3 across many distinct disciplines - far beyond just the life sciences. Analogously to the strategy of Azoulay, Stuart, and coauthors, for every prize-winning topic, they identify five “control” topics that are qualitatively similar, except for the fact that no one working in those topics won a prize.

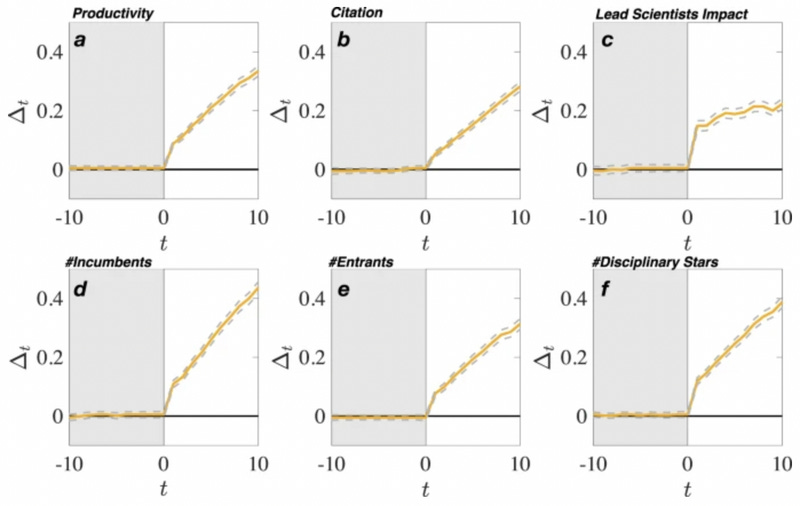

To identify controls, they look for topics that have very similar trajectories to the eventual prize-winner for number of publications, citations, number of scientists, number of new scientists, and other characteristics. The idea is that these non-prizewinning fields show what would have happened to the prize-winning topic in the absence of the prize. The key result is that topics that get prizes go on to get a lot more research attention than those that don’t.
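One generic way to find topics with very similar trajectories is nearest-neighbor matching. The sketch below is an assumption, not Jin, Ma, and Uzzi's exact procedure: it measures the Euclidean distance between log-scaled yearly series of several hypothetical measures and keeps the five closest topics that never won a prize.

```python
import math


def trajectory_distance(a, b):
    """Euclidean distance between two topics' pre-prize trajectories, on a log scale.

    Each trajectory maps a measure name (e.g. "publications", "citations",
    "scientists", "new_scientists") to a list of yearly values covering the
    same pre-prize years. log1p keeps large counts such as citations from
    swamping smaller ones such as new scientists.
    """
    return math.sqrt(sum(
        (math.log1p(x) - math.log1p(y)) ** 2
        for measure in a
        for x, y in zip(a[measure], b[measure])
    ))


def nearest_controls(treated, candidates, k=5):
    """Ids of the k never-awarded candidate topics closest to the treated topic."""
    return sorted(candidates, key=lambda cid: trajectory_distance(treated, candidates[cid]))[:k]


prize_topic = {"publications": [1, 2, 3], "scientists": [2, 2, 3]}
candidates = {
    "a": {"publications": [1, 2, 3], "scientists": [2, 2, 3]},
    "b": {"publications": [10, 20, 30], "scientists": [9, 9, 9]},
    "c": {"publications": [1, 2, 4], "scientists": [2, 3, 3]},
}
print(nearest_controls(prize_topic, candidates, k=2))  # ['a', 'c']
```

The log scale is a design choice: without it, a topic's citation counts would dominate the distance and the other measures would barely affect which controls are chosen.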

As with Azoulay, Stuart, and coauthors, prize-winning topics tended to garner more citations (though in this case we don’t know how the citations were divided up between the prize recipient and other people active in the field). But Jin, Ma, and Uzzi collect a lot of additional measures that are quite relevant for whether prizes help coordinate research effort. They also look at the number of new articles published in each topic as well as the number of scientists working on it. The latter is broken down into incumbents (who were already publishing on the topic before it received a prize) and entrants (those who were not), as well as the number of elite scientists working in it (elites are defined here as scientists in the top 5% most cited for the entire discipline).

As the figure below indicates, topics whose scientists get a prize are identical on all these measures to their controls in the decade before a prize is awarded, but then diverge significantly in the decade after. Along every measure of research effort, prize-winning topics see a big boost: 25-55% higher annual effort by the end of the decade, depending on the measure. Looking topic by topic, in 60% of prize-winning topics, research effort grew fast enough relative to the controls that it is very unlikely the difference arose purely through chance.

Consistent with the idea that prizes are serving to steer research into new topics, Jin, Ma, and Uzzi find the growth of fields is much stronger when prizes are awarded to scientists who only recently began working on a topic (implying the prize is for more recent work, which might have more scope for beneficial follow-on research than older work). The impact of prizes is also larger when they are discipline-specific and come with a monetary reward, both of which might increase the award’s reputation among researchers with the capacity to change their research focus. That said, the effect of being a discipline-specific award or a moneyed award is a lot weaker than the effect of having an award that focuses on recent, rather than older, research.

Objection! Prizes Aren’t Random

Jin, Ma, and Uzzi find that on average, prizes significantly boost research attention to topics, while Azoulay, Stuart, and coauthors tend to find on average the effects are muddled (more attention for some, less for others), though prizes are quite good for topics that were unappreciated prior to receiving honors. There are a few possible reasons for the different results of these different papers. One might be that the Howard Hughes Medical Institute honors really are different, but only in the sense that they have an unusually muted effect compared to the average prize!

But another issue might challenge this whole notion that prizes coordinate research. Jin, Ma, and Uzzi take pains to present their work as documenting an unappreciated statistical association between prizes and subsequent growth of fields. At other points, they describe their analysis as testing for a non-causal statistical relationship. They don’t want to say prizes cause growth of research topics.

Because of course, prizes are not randomly bestowed. Instead, they go to scientists who have made a prize-worthy contribution; who have achieved some breakthrough. The trouble is, regardless of whether a breakthrough wins a prize or not, it’s probably easier to do important and interesting work in a topic that has just had a breakthrough. If nothing else, a breakthrough makes it possible to answer questions that have not already been answered. If scientists know about the research of their peers and can independently recognize a breakthrough when it occurs, and if breakthroughs are not so common that coordination is hard, then a topic that gets a breakthrough is also more likely to attract research effort, regardless of whether it wins a prize or not. So do the results above indicate that prizes help coordinate researchers to converge on the right breakthroughs? Or is it merely that breakthroughs attract research attention and, incidentally, prizes?

Each of these papers is aware of this issue, which is why they try to compare topics affected by a prize to controls. If our controls are perfect - if topics really are the same except one gets a prize and the other doesn’t - then this isn’t an issue. But it’s challenging to completely eliminate this concern.

In Jin, Ma, and Uzzi, recall they match each topic to a set of controls that look pretty similar by the numbers, up until the year a prize is given. That should help, especially if the prize-winning breakthrough is a few years old and so shows up in the pre-prize data. Any breakthroughs that occur a few years before a prize is bestowed have time to generate citations and research interest, if they are capable of doing so without a prize. Whether they do or do not attract research attention on their own merits, these kinds of topics can be matched to suitable controls that exhibit the same citation trajectory. In theory, controls could even include topics that had their own breakthroughs, and hence generated their own little bump in research attention, but didn’t get awarded prizes.

But suppose a nascent breakthrough is universally recognized right away, but the resulting attention takes a year or two to show up in the data in the form of new publications, citations, and scientists working on the topic. Meanwhile, a prize is awarded right away, before these publications, citations, and scientists show up. If we match this topic to another topic that looks similar by the numbers, it won’t actually be a good control. In this case, the prize-winning topic has had a breakthrough that will invariably lead to more research attention, whether or not it gets a prize, and the control topic does not. The prize predicts their divergence but does not cause it.

Reschke, Azoulay, and Stuart (2018) avoids this risk by actually eliminating from their analysis all papers published in the two years prior to the HHMI appointment. That means if any papers describe a breakthrough, these papers have two years to accrue citations, and so they can be matched to suitable controls that exhibit a similar citation profile. This strategy is possible for Reschke, Azoulay, and Stuart because they are focusing on papers associated with prize winners. But Jin, Ma, and Uzzi are matching entire topics, not papers within topics. At the scale they are working with, they cannot pin down the papers most associated with each prize. They do test for unusual growth divergences in the year right before a prize is awarded, and find nothing. Nonetheless, their results seem a lot more likely to include these kinds of cases where a breakthrough discovery generates attention and, incidentally, prizes. Hence their cautious language about the interpretation of their results.

One alternative way to get at this question is to look for a case where honors are bestowed at random, instead of in the wake of a major breakthrough. And we do have one paper that does that.

The Randomness of Life and Death

As Euripides says, “No one can confidently say that he will still be living tomorrow.” Azoulay, Wahlen, and Zuckerman Sivan (2019) looks at a completely different kind of public honor: memorials that occur when scientists die in the midst of an active research career. Of course, memorials are not usually intended to serve as coordination events for research. Nonetheless, they satisfy the conditions highlighted above for coordinating research, since they credibly and publicly highlight a small subset of valuable research. If these memorials incidentally generate an increase in citations to the work of the deceased, that suggests research attention is shifted around by these kinds of public signals, even when they are not precipitated by some recent breakthrough discovery.

Azoulay, Wahlen, and Zuckerman Sivan identify over 10,000 elite life scientists at various stages of their careers (they use a number of criteria to identify elite scientists, such as being highly funded or cited, having many patents, or winning prestigious awards and honors). Of this group, 720 died amid an active research career. For each of the articles written by these deceased scientists, Azoulay, Wahlen, and Zuckerman Sivan identify a control article from the same year, written by another (living) elite scientist who was the same age as the deceased at the time the article was written. The control article should also belong to a different field and not share any coauthors with the deceased, and the elite scientist’s contribution to each paper should be the same (for example, both last author or first author). They can then compare the citations received by articles after a scientist passes away to those received by control articles whose author still lives.

Indeed, when an elite life scientist passes away they are much more likely to have their work be publicly memorialized than living peers, and their work also sees a 7.4% increase in citations, relative to the papers of peers who live. And this does not appear to be only because of an uptick of articles memorializing the scientist in the years after their death. The effect is just as strong if you look only at citations that begin accruing five years after death.

Moreover, as with HHMI winners, the kinds of articles that we would expect to benefit the most from this kind of coordination event are also the articles that enjoy the largest increase in citations. Articles published in the last three years before the scientist dies enjoy an above-average increase in citations, as do the least well-known papers of the scientist (those in the bottom 10% of the scientist’s citations).

Are these citations really indicative of a change in research direction though? It’s tough to say, but there are two points worth noting. First, there is no evidence that the extra citations come exclusively from former coauthors, colleagues, and people operating in fields that are unlikely to be influenced by the research. We might be worried citations of that type are more likely to reflect a desire to remember someone, rather than being the more standard type of citation to relevant academic literature. It is difficult to estimate specific citation patterns across these categories with much precision, but no systematic differences are readily apparent. That’s consistent with a broad-based increase in citations, not an increase clustered around friends and colleagues.

Second, a companion paper to this one, Azoulay, Fons-Rosen, and Graff Zivin (2019) (written about in much more detail here) does find that topics where elite researchers were active also see an increase in entrants to these fields after the elite life scientist passes away. In other words, when a scientist passes away, new people do start publishing work in that field. Moreover, this effect is strongest in topics where a consolidated coauthorship network has not emerged, which is likely the kind of field where coordination events might be most useful. That all said, while this is consistent with the idea that memorial services highlight promising research areas and thereby inadvertently coordinate research, it’s important to note this is not the interpretation the authors give. Azoulay, Fons-Rosen, and Graff Zivin primarily interpret this as being about how dominant researchers in a field can consciously or unconsciously define which questions and approaches merit attention in a given topic, so that when they pass away, new approaches and ideas have space to grow. Still, this paper provides some additional evidence that the increase in citations following death and subsequent public memorials reflects real changes in research.

Can you build a research field with prizes?

So, after all that, on balance I think it looks like prizes can help coordinate a critical mass of scientists to work on a specific topic. Jin, Ma, and Uzzi find a quite strong association between prizes and the subsequent growth of a topic. That growth might be significantly overstated, given the difficulties in isolating the causal power of the prize itself versus the event that merited the prize. But a variety of more micro evidence in the life sciences suggests publicizing research can still play a big role in nudging people to work on a topic, though perhaps mainly in domains that would otherwise be overlooked.

New Things Under the Sun is produced in partnership with the Institute for Progress, a Washington, DC-based think tank. You can learn more about their work by visiting their website.

1. The articles “Gender and what gets researched,” “How a field fixes itself: the applied turn in economics,” and “Building a new research field” all discuss this idea of critical research mass more fully.

2. Don’t feel bad, I didn’t win an HHMI either.

3. What’s a “topic”? It’s actually a Wikipedia article tagged as being about a scientific topic! There are over 10,000 such articles, and the Microsoft Academic Graph has tried to match papers to topics defined in this way.