Prediction Technologies and Innovation

Looking Under the Lamp Post

Announcements:

Gaétan de Rassenfosse has launched a new living literature review, The Patentist, about intellectual property rights. Check it out!

Graduate students and employees at qualifying organizations, consider a summer internship at Open Philanthropy! Applications are due January 12.

The National Bureau of Economic Research is offering research fellowships to study innovation and productivity policies. Applications due January 16.

The Research Transparency and Reproducibility Training (RT2), hosted by the Berkeley Initiative for Transparency in the Social Sciences, will be May 21-23, in Berkeley, CA. Learn more / apply here. Applications due January 19.

The Metascience 2025 conference will be in London over June 30-July 2 and has an open call for proposals until February 7, 2025. I plan to be there!

Apply for a studentship to do a PhD with the London School of Economics and CERN to study the socio-economic impacts of big science - think Large Hadron Collider or International Space Station. Applications will be considered on a rolling basis until May 22.

Email me to suggest an announcement for the next newsletter. On to the post!

This article will be updated as the state of the academic literature evolves; you can read the latest version here. You can listen to this post above, or via most podcast apps here.

[May 2025: One of the papers discussed here has been retracted. See the updated version of the post here.]

Some inventions and discoveries make the inventive process itself more efficient. One such class of invention is prediction technologies, which come in many forms. AI is one example: it can help scientists and inventors make better predictions about what is worth trying as a candidate solution to a problem. But as we’ll see, there are many other kinds of prediction technology as well.

Prediction technologies seem obviously valuable to scientists and inventors: discovery is all about stepping into the unknown, and a good prediction technology can make it more likely the trip into the unknown will be a productive one. But people often worry that they can have a negative effect if they focus too much inventive effort onto the things the prediction technology helps most with.

Streetlights and Rationality

The most famous example of this phenomenon is the proverbial story of the drunk man struggling to find some keys he dropped. It’s night time in the parable, and he’s looking for his keys under the streetlight, but not having any luck. When asked if this is where he dropped them, he says “no, I dropped them over in that field; but this is where the light is.” In the same way, prediction technologies might bias innovators to look where it’s convenient, not necessarily where it’s most important to look.

The parable doesn’t make much sense, though, if you assume the person looking for their keys is clear-headed; if they know they dropped their keys far from the light, they should know not to search near the light. Similarly, it’s hard to tell a story where a clear-sighted inventor is made worse off by getting access to a prediction technology. Such an inventor can simply decide not to use the prediction technology when it would steer them toward solutions that won’t be very significant.

Hoelzemann et al. (2024) point out a way that prediction technologies could make us worse off though, even if scientists/inventors are clear-headed and rational. The key idea is that the discoveries made by scientists and inventors tend to be public, and so scientists/inventors learn from each other.1 In such a world, collective innovation happens more quickly if individuals pursue different lines of research. For example, if I see a rival’s line of research fails, then I know not to go down that road myself. If it succeeds, I can copy that approach.

In this kind of process of collective discovery, a prediction technology can actually make us worse off if it leads everyone to focus on the same small number of research lines. Hoelzemann and coauthors first show this with theory; if you don’t care about the knowledge spillovers to your peers, then it can be rational for everyone to follow the same promising research line, rather than trying different approaches (which might seem less promising individually).

Hoelzemann and coauthors also run an experiment where participants must choose among five options with unknown payouts. Participants play two rounds with the same payouts, so if someone finds a high payout in the first round, everyone learns it and can opt to choose that option in the second round. Hoelzemann and coauthors show that if you don’t tell participants anything, then they will tend to all guess at different options in the first round, which helps them learn which options are best before the second round. But if you tell participants about an intermediate payout - not the best, but not the worst - then many participants simply select that option (which is for sure not bad, even if it’s not great). Since more people pick the same thing, the group is less likely to discover the best payout and hence less likely to receive the best payout in the second round. Indeed, in the experiment, participants are usually worse off with information about an intermediate payout than when they don’t know anything at all.
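
To make the herding logic concrete, here is a toy simulation of a two-round game like the one described above. It is only a sketch, not the authors’ actual design: the payoff values, the group size of three, and the `herd_prob` parameter (the chance a player picks the revealed option) are all assumptions I made up for illustration.

```python
import random

# Hypothetical payoffs for five options; the values are illustrative only.
PAYOFFS = [1, 2, 3, 4, 10]
BEST = max(PAYOFFS)
REVEALED = 2  # index of the intermediate option (payoff 3) shown to players


def play_group(n_players, herd_prob, rng):
    """Play one two-round game and return (found_best, round2_payoff).

    herd_prob is the assumed chance that a player picks the revealed
    option in round 1. herd_prob=None means nothing was revealed and
    players spread out over distinct options.
    """
    options = range(len(PAYOFFS))
    if herd_prob is None:
        picks = rng.sample(options, n_players)
    else:
        others = [i for i in options if i != REVEALED]
        picks = [
            REVEALED if rng.random() < herd_prob else rng.choice(others)
            for _ in range(n_players)
        ]
    seen = [PAYOFFS[i] for i in picks]
    if herd_prob is not None:
        seen.append(PAYOFFS[REVEALED])  # the revealed payoff is always known
    # Round 2: everyone copies the best payoff observed so far.
    round2 = max(seen)
    return round2 == BEST, round2


def simulate(n_players=3, herd_prob=None, trials=20_000, seed=0):
    """Return (share of groups that found the best option, mean round-2 payoff)."""
    rng = random.Random(seed)
    results = [play_group(n_players, herd_prob, rng) for _ in range(trials)]
    discovery_rate = sum(found for found, _ in results) / trials
    mean_round2 = sum(payoff for _, payoff in results) / trials
    return discovery_rate, mean_round2


if __name__ == "__main__":
    print("no information:", simulate(herd_prob=None))
    print("intermediate revealed:", simulate(herd_prob=0.8))
```

In this toy setup, three players spreading over five options find the best one about three times in five. Once most of them crowd onto the revealed option, the group rarely finds it, so the second-round payoff tends to be lower. How big the gap is depends entirely on the made-up parameters.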

This is a nice demonstration of a theoretical downside to prediction technologies. Let’s now turn to some studies of how real-world prediction technologies affected innovation.

Structural Biology in 2003

To start, let’s consider Kim (2023), who looks at the impact of a new software program in structural biology. This is a field where scientists typically try to figure out the 3D structure of proteins by interpreting data from X-ray diffraction patterns. Kim studies the 2003 introduction of a software program called Phaser that was useful in inferring the 3D structure of proteins.

A key limitation of Phaser is that it only helps you find the structure of proteins that are closely related to proteins whose structure we already know. If there are no known structures “nearby” in the space of possible proteins, the program isn’t helpful. But maybe those are precisely the proteins we want to know more about?

Kim looks at how the research focus of structural biologists changed after Phaser was introduced. To do that, she partitions the universe of proteins (both those with known and unknown structures) into tens of thousands of clusters, where all the proteins in a given cluster have similar amino acid sequences (and hence, are more likely to have similar structures).2 She then restricts her sample of clusters to those where the proteins were known to exist before 1998 (when her sample begins), and which contain at least one human protein. In some of these clusters, we additionally knew the structure of some of their proteins by 1998, and in others we did not. Kim looks to see how scientific interest in proteins in these different kinds of clusters changed after Phaser came along and made it easier to solve the structures of proteins in clusters where we already knew the structure of some other proteins in the cluster.

She finds that clusters with known structures saw a 7% increase in the number of solved structures, relative to clusters without known structures, following the introduction of Phaser. In other words, this prediction tool did disproportionately increase effort on proteins that were “under the street light.” Worryingly, she also finds some evidence that the program increases work on proteins that are less important. Clusters with known structures see relatively larger increases in the number of protein structures that are solved but which don’t get published in academic journals, don’t get cited by patents, and don’t seem to merit annotation about their functions from experts in another database. Kim takes these as proxy evidence for a relative increase in work on less important protein structures.
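
A relative before-and-after comparison like this one can be sketched as difference-in-differences arithmetic. The numbers below are made up so that the answer comes out at 7%; they are not Kim’s data, and I am assuming (not asserting) that her estimate has this general shape.

```python
def relative_change(before, after):
    """Proportional change from the pre-period mean to the post-period mean."""
    return (after - before) / before


def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in the treated group minus the change in the comparison group."""
    return relative_change(treated_before, treated_after) - relative_change(
        control_before, control_after
    )


# Hypothetical average solved structures per cluster per year (not Kim's data).
known_before, known_after = 2.00, 2.34      # clusters with known structures
unknown_before, unknown_after = 1.00, 1.10  # clusters without known structures

effect = diff_in_diff(known_before, known_after, unknown_before, unknown_after)
print(f"relative increase for clusters with known structures: {effect:.1%}")
```

The point of subtracting the comparison group’s change is to net out anything that raised structure-solving everywhere, so what remains is the extra growth in the clusters where Phaser could help.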

Gene-Disease Association Maps

Tranchero (2023) and Kao (2023) also document the strong differential effects of prediction technologies on innovation, this time in the context of drug discovery. Both examine the impact of genome mapping studies that establish correlations between diseases and mutations in certain genes (i.e., “people with this disease tend to have mutations in this gene”). Both look at how clinical trials focused on specific diseases and genes evolve following genome mapping studies; Kao focuses on cancers, and Tranchero on disease more generally.

A key part of both datasets is built from genome mapping efforts. Kao looks at 168 systematic cancer mapping efforts published in prestigious journals. These studies were cancer-specific; for example, a study might sequence a set of ovarian cancer tumors to identify all genes that tend to exhibit mutations in ovarian cancer cells. Tranchero’s study is based on 1,259 genome-wide association studies, which try to identify the genes that disproportionately exhibit mutations in people with a given disease, compared to people without it. Drug companies benefit from knowing about these associations, because they can develop drugs that target the implicated genes.

Both Kao and Tranchero then look to see how innovation is affected for treatments that target a specific gene, to treat a specific disease. In the figure below, we’re focusing either on the number of phase II clinical trials associated with a particular gene-cancer pair (Kao 2023, left) or the number of patent applications associated with drugs for a particular gene-disease pair (Tranchero 2023, right). Both figures compare the average number of clinical trials/patent applications for a gene-disease pair that eventually has an association discovered (at time zero in the figure) to the average number of clinical trials/patent applications for gene-disease pairs that do not have a discovered association. We can see that after associations are discovered, there’s a relative increase in innovation efforts based on that gene-disease combination.

Once again, we can ask whether this prediction technology pulls innovators away from important lines of research.

One problem with genome association studies is that sometimes they misidentify chance associations as meaningful associations. Tranchero has an interesting way to identify this: sometimes a small study identifies an association between a gene and a disease, but then a bigger study comes along later and doesn’t find the same association. That suggests the first study just identified a false positive and there is no actual meaningful relationship between the gene and the disease. This happens surprisingly often - Tranchero finds that about 85% of disease-gene associations do not replicate in subsequent studies. Tranchero uses this fact to identify a set of gene-disease associations that are subsequently revealed to be likely false positives. He shows the number of patent applications associated with a gene-disease pair does increase significantly, even if the pair is subsequently revealed to be a false positive. Patent applications based on false positives do not tend to generate valuable patents by a variety of metrics: they’re not highly cited, and they’re not associated with above-average patent value.

It’s not all bad though. While a false disease-gene association increases related patent applications by about 0.07-0.15 per year, a true association increases applications by substantially more (0.5-0.7 per year), and is associated with more valuable subsequent patents. Meanwhile, Kao finds that clinical trials initiated after cancer-gene associations are discovered are a bit more likely to generate positive outcomes (for example, a statistically significant increase in survival probability).

Materials Science Machine Learning

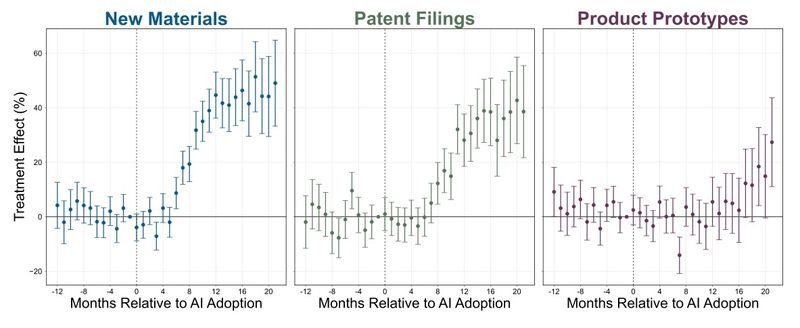

As a final prediction technology, let’s consider machine learning. Toner-Rodgers (2024) looks at a large materials science company, which in mid-2022 began rolling out access to an AI tool to randomly selected research teams. This tool allowed researchers to specify desirable properties for new compounds, and predicted which kinds of compounds might have those properties. Toner-Rodgers can measure the impact of this tool on innovation in a few ways. First, the company has an internal database of materials it believes are viable for product use; new additions to this database are one way he tracks discoveries. Second, he can look at patent filings (see this post for a discussion of how well patents do and do not track innovation outcomes). Third, he can look at new products sold by the company that incorporate newly discovered materials. Below, we can see how the rate of discovery across these different measures changes when teams get access to the AI tool, as compared to teams that lack access.

These are pretty gigantic effects; teams with an AI tool are discovering 20-50% more new materials than their peers without access to this prediction technology!

To look at the kinds of new materials developed, Toner-Rodgers uses a few different approaches. First, he uses a measure of how similar the crystal structure of a new material is to the structures in existing datasets.3 Second, he looks at the text of filed patents. He assumes patents with language that is more dissimilar to existing patents, or which introduce a large share of new technical terms, are more novel (an approach used in other literatures). Third, he looks at the share of new materials that are also new product lines, rather than improvements to existing ones (which most new materials are). And finally, he simply surveys the researchers to ask whether they think AI helps them make more novel materials. Across each of these measures, he finds teams that have access to the AI tool produce more novel materials than teams that don’t. For instance, 73% of surveyed respondents thought the tool generated more novel designs than other methods.

The size of these effects was meaningful, if not revolutionary. To take one illustrative example, imagine you line up patents by how similar they are to existing patents (based on using similar uncommon words). Designate the far left 0%, where you have the patents that are most unlike existing patents, in their word choices, and designate the far right 100%, where you have the patents that are the most similar to existing patents, again in terms of word choices. On average, we would put the patents of teams using AI at the 42% position, and the teams not using AI at 48%.
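
The “line them up” exercise is just a percentile rank. Here is a minimal sketch, assuming each patent already has a similarity-to-existing-patents score (higher means more similar wording); the scores and the `percentile_position` helper are hypothetical and are not the paper’s actual measure.

```python
from bisect import bisect_left


def percentile_position(score, reference_scores):
    """Share of reference patents that are less similar to prior art than `score`.

    0 means more unlike existing patents than everything in the reference
    set; 100 means more similar than everything in it.
    """
    ordered = sorted(reference_scores)
    return 100 * bisect_left(ordered, score) / len(ordered)


# Hypothetical similarity scores for ten reference patents.
reference = [0.10, 0.20, 0.30, 0.40, 0.50, 0.60, 0.70, 0.80, 0.90, 1.00]

# Four of the ten reference scores are lower than 0.45.
print(percentile_position(0.45, reference))  # 40.0
```

On this scale, a lower position means more distinctive wording, which is why the 42% position reads as more novel than the 48% one.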

Taken together then, access to an AI tool has a pretty dramatic effect on the number of new materials discovered, and appears to direct research effort towards materials that are more, rather than less, distinctive, at least as compared to previous materials.

Streetlights and Floodlights

To sum up, we’ve got four studies of three kinds of prediction technology (software in structural biology, genome-disease maps, and an AI tool for materials science), all of which study the effects of prediction technologies on innovation in slightly different ways. None of these studies looks directly at the kind of herding concerns that we kicked off this post with. But at least some of them do show that prediction technologies can affect research choices in a way that could lead to a net decline in the diversity of research approaches. The Phaser software program and genome-disease association maps both lead to significant increases in research of the kind the prediction technology makes more predictable. And in both cases, it’s also plausible that the increased research activity was of the mediocre type that Hoelzemann et al. (2024) warn could lead to an overall decline in the rate of collective invention. The protein structures that became more common to study seem less valuable, and a large share of the disease-gene associations that were investigated are probably false positives. The results for AI have a quite different flavor, but we’ll come to those in a minute.

But before we do that, it’s worth pausing for a second to emphasize that the potential downsides of streetlight effects or the narrowing of research approaches aren’t unique to prediction technologies. That’s because prediction technologies are not the only thing that helps us predict which lines of research are likely to be most promising. Another thing that does that is knowledge, in general. Indeed, I have another post, Science as a map of unfamiliar terrain, that uses a prediction technology - a map - as a metaphor for understanding the impact of science on innovation.

Hoelzemann et al. (2024) is the paper we kicked off with, that used theory and an experiment to highlight how knowledge could lower the rate of collective invention. They close their paper with a real world illustration of how the streetlight effect can happen simply as a consequence of normal research. In this case, they focus on research to uncover the genetic roots of different human diseases. They aim to show that when there is an early discovery of a gene weakly related to a particular disease, that leads to less exploration than if no gene had been found at all, which ultimately delays the discovery of genes strongly related to a given disease. To do that, they look at over 4,000 diseases listed in the DisGeNET dataset, which compiles all scientific publications that link human diseases to their genetic causes. DisGeNET scores the strength of an association between a gene and a disease, so over the period 1980-2019, Hoelzemann and coauthors look to see what is the strongest gene-disease association discovered in the first 10% of papers published about a disease.

In the figure below, they look at the average number of new genes added to the dataset, per paper, before and after a disease-gene association of medium strength is discovered,4 as compared to other diseases. After year 0, which is when a medium-strength gene-disease association is found, the number of new genes studied (per paper) drops, consistent with a narrowing of research strategies after scientists see that one approach is at least somewhat promising.

Hoelzemann and coauthors argue that this reduction in exploratory research can slow collective innovation. And indeed, they find that diseases where researchers initially discover a gene-disease association of moderate strength actually take longer to yield very strong gene-disease associations than diseases where only weak associations are found initially. In their dataset, a disease whose initial discoveries include only a weak gene-disease association will typically see a strong association discovered two years earlier than a disease whose initial discoveries include a medium strength association!

So it’s actually anything that might make some research lines disproportionately more attractive that can lead to inefficient research herding: prediction technologies, yes, but also discoveries, and presumably other things like changing costs of some kinds of research relative to others. The pressure to reduce exploratory research when a field makes progress is probably just another unfortunate property of the natural world, like winter. You can’t stop winter, and you can’t stop the fact that discovery and progress make some research lines more attractive and hence broad-based exploration less attractive. Instead, the solution here seems to be setting up incentives that counteract these tendencies by rewarding exploration directly; one way to do that is to reward novelty itself, for example by giving disproportionate credit to people who are first to make a discovery. We in fact do this, though that has its own drawbacks (see the post Publish-or-perish and the quality of science).

Alternatively, you can “just” invent prediction technologies that cover a very broad range of research lines. There’s an interesting contrast between Kim (2023) and Toner-Rodgers (2024). Both papers are about using software to predict molecular structure. However, Kim (2023) finds access to a prediction technology reduced the novelty of scientists’ efforts - more protein structures got published that were similar to existing proteins. Toner-Rodgers (2024) instead finds access to a prediction technology increased the novelty of research efforts - more materials were created that were dissimilar to existing materials. But I don’t think there’s really any contradiction here. People flock to the light offered by these prediction technologies, but different technologies throw out different amounts of light. We can imagine Kim (2023)’s technology is like a lonely streetlight, only illuminating protein structures that are near to others we already know, while Toner-Rodgers’ technology is a gigantic set of floodlights that illuminate a whole field.5

Thanks for reading! As always, if you want to chat about this post or innovation in general, let’s grab a virtual coffee. Send me an email at matt@newthingsunderthesun.com and we’ll put something in the calendar.


1. See the post Knowledge spillovers are a big deal for more on this point.

2. If your microbiology is a bit rusty, recall that proteins are built from chains of linked amino acids. Often we know which amino acid is each link in the chain, but that doesn’t tell us how the chain will fold up into its final 3D structure. These structures are important for understanding the function of proteins in the cell.

3. This approach for comparing structural similarity of crystal structures has been criticized, e.g., here. So I think it’s useful that we have some other measures to look at as well.

4. A “medium strength” association is defined by them as one whose DisGeNET association score is between the 60th and 90th percentile, where 100% is the strongest association in the dataset.

5. That said, it’s also possible that the AI tool Toner-Rodgers studies actually induces a narrowing of research strategies, but the research strategies it encourages are sufficiently distinct from what humans have done that they show up as novel. If that’s the case, we’ll eventually see a drop in the novelty of people using the tool. Time will tell; research is hard.