Announcements:

The NBER is again running an Innovation Research Boot Camp this summer, with space for 25 PhD students (or recent PhD graduates). Apply by February 6. More here.

The Washington Center for Equitable Growth is requesting proposals for policy-relevant research that promotes competition and supports workers in an era of AI innovation. Applications due February 10. More here.

Email me to suggest an announcement for the next newsletter. On to the post!

This article will be updated as the state of the academic literature evolves; you can read the latest version here. You can listen to this post above, or via most podcast apps here.

[May 2025: One of the articles discussed here has been retracted. See the updated article here.]

Which kind of inventor (or scientist) is going to benefit more from artificial intelligence: novices or experts? In theory, it can go either way.

Here’s a simple model. Assume you need to solve a bunch of sub-problems to discover or invent something. Novices aren’t very good at solving any sub-problems, while experts are good at solving the sub-problems related to their expertise. Finally, we’ll assume AI can help solve some of these sub-problems.

Suppose AI is good at solving the same sub-problems that experts are good at. In that case, AI doesn’t help experts very much, since it just helps them do what they already knew how to do. But novices using AI might become just as effective as experts. In this case, novices benefit much more from AI than experts, since they now operate on a level playing field with them.

Alternatively, suppose AI is good at solving sub-problems that experts are not good at. In that case, both novices and experts benefit, but experts might benefit a lot more. In this scenario, the experts use the AI to help solve sub-problems they are bad at, and continue using their own expertise to solve the things AI is bad at. Novices can also benefit from AI, but continue to struggle on the sub-problems that AI is bad at. It’s possible the gap between them and experts actually widens.

There are all sorts of middle cases as well, where AI helps solve some of the sub-problems experts are good at, but maybe not all of them. In that case, who benefits most may depend on how important the remaining problems are.
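To make the two polar cases concrete, here is a minimal Python sketch of the model. Everything in it is an illustrative assumption of mine rather than something taken from the papers discussed below: the two sub-problems, the 0.25 and 0.75 success probabilities, and the rule that someone using AI gets the better of their own skill and the AI's skill on each sub-problem.

```python
# Toy version of the sub-problem model. All numbers are made-up assumptions.
SUBPROBLEMS = ["A", "B"]
LOW, HIGH = 0.25, 0.75  # hypothetical chance of solving a single sub-problem

novice = {"A": LOW, "B": LOW}    # not very good at anything
expert = {"A": HIGH, "B": LOW}   # good only at sub-problem A


def success_chance(skill, ai=None):
    """Chance of solving every sub-problem, using the better of own skill or AI."""
    ai = ai or {}
    chance = 1.0
    for sub in SUBPROBLEMS:
        chance *= max(skill[sub], ai.get(sub, 0.0))
    return chance


def gain_from_ai(skill, ai):
    """How much the overall success chance rises once AI is available."""
    return success_chance(skill, ai) - success_chance(skill)


ai_overlaps = {"A": HIGH}      # AI is good at what experts already do well
ai_complements = {"B": HIGH}   # AI is good at what experts are bad at

for label, ai in [("overlaps", ai_overlaps), ("complements", ai_complements)]:
    print(label, "novice gain:", gain_from_ai(novice, ai),
          "expert gain:", gain_from_ai(expert, ai))
```

With these made-up numbers, when the AI overlaps with expertise the novice catches up to the expert completely and the expert gains nothing. When the AI complements expertise, both gain, but the expert's gain is three times the novice's, so the gap widens.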

AI is not the only kind of tool people use to help them solve sub-problems they are facing. But the same logic applies to other “prediction technologies” as well.

Literal Maps

Let’s start with Nagaraj (2022), which illustrates the above in unusually concrete terms. We’ll be focusing on mining companies that are trying to find new deposits of gold (itself a common metaphor for the inventive process!). A key prediction technology for this activity is the aerial photo, since overhead photos help mining companies predict where gold deposits will be by revealing associated geological features, such as faults and lineaments. It had long been possible to acquire images like this by paying for a plane to survey some land, but it was costly. Then, in 1972, the Landsat program began to systematically photograph the Earth from space and make these images available through mail-order for a modest fee. Nagaraj looks at how the sudden availability of satellite imagery affected gold-mining by new firms (novices, in the context of this post), and by incumbents (the “experts”).

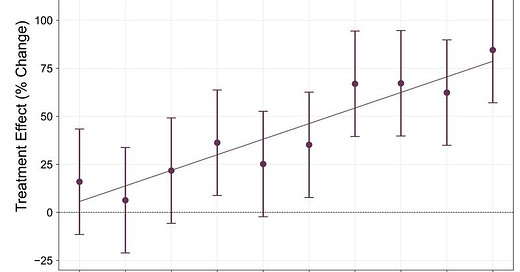

Satellite mapping began in 1972 and continued for the next decade, which means some areas got mapped before others. Which areas got mapped when doesn’t seem to have been driven by gold potential; there was also some randomness in how useful the maps were, based on the extent of cloud cover when an image was taken. Nagaraj’s main analysis compares the annual number of new gold discoveries on a particular tract before and after it was mapped (or mapped when cloud cover was low). The figure below documents the average difference in new gold discoveries made between tracts that receive a low-cloud photo and those that don’t, with the low-cloud image becoming available at year 0. We can see that prior to the availability of a good image, there’s no systematic difference in the rates of new gold discoveries between different tracts, and then after some tracts get good images, gold deposits start being found on them at a higher rate than on the ones that lack a good image (though it takes several years to see an effect).
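One simple way to express this kind of before-and-after comparison is a difference-in-differences calculation. The sketch below is only that: the tract names and discovery counts are invented for illustration, and Nagaraj's actual specification is richer and is not reproduced here.

```python
from statistics import mean

# Hypothetical data, made up for illustration only.
# Each record: (tract, got_low_cloud_image, period, discoveries_per_year)
records = [
    ("A", True, "before", 1.0), ("A", True, "after", 3.0),
    ("B", True, "before", 2.0), ("B", True, "after", 4.0),
    ("C", False, "before", 1.0), ("C", False, "after", 2.0),
    ("D", False, "before", 2.0), ("D", False, "after", 2.0),
]


def group_mean(imaged, period):
    """Average discoveries per year for one group of tracts in one period."""
    return mean(d for _, img, per, d in records if img == imaged and per == period)


def diff_in_diff():
    """Change for imaged tracts minus change for tracts without a good image."""
    change_imaged = group_mean(True, "after") - group_mean(True, "before")
    change_other = group_mean(False, "after") - group_mean(False, "before")
    return change_imaged - change_other


print("pre-period gap:", group_mean(True, "before") - group_mean(False, "before"))
print("difference-in-differences:", diff_in_diff())
```

In this invented example the two groups look the same before imaging, and the imaged tracts then pull ahead, which is the pattern the figure describes.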

So who benefits more from satellite maps: the novices or the experts? As we noted at the outset, it should depend on whether there are important sub-problems associated with making a gold discovery that expertise helps with, and which maps do not help with.

Nagaraj focuses on the sub-problems of gold mining associated with institutional and regulatory barriers: environmental regulations, labor regulations, legal institutions, etc. These are the kinds of barriers that we would expect incumbent firms to have some experience dealing with, but for which satellite imagery doesn’t help much. Accordingly, we might expect access to good satellite images to help novices make discoveries when these other barriers are low, but to help experts when they are high. And that’s basically what Nagaraj finds.

To measure the strength of these other sub-problems, Nagaraj relies on a 2014 survey of 4200 industry managers on the costs of exploration arising from these kinds of institutional and regulatory barriers. He labels the 25% of land tracts belonging to the lowest cost territories as “low cost”, the middle 50% of land tracts as “medium cost”, and the top 25% as “high cost.”

In all cases, senior firms (our experts) benefit from mapping. Across all three types of land tract (low, medium, and high cost) they make more discoveries in a tract after it has been mapped, as compared to unmapped tracts. That said, the benefits of mapping shrink as other costs rise - they see the largest increase in discoveries for the low cost areas, and the smallest increase in discoveries in the high cost areas.

On the other hand, junior firms (our novices) only benefit from mapping when costs are low or medium. Among high cost land tracts, satellite mapping does not seem to help these novice firms at all. So on high cost land tracts, this prediction technology widens the gap between experts and novices. On the low and medium cost lands though, things are a bit more nuanced. When a region gets mapped, the absolute number of new discoveries made by senior firms increases by more than the absolute number of new discoveries made by junior firms. But junior firms make fewer discoveries overall, so that in proportional terms, they benefit more. The net effect is that the share of discoveries made by novices rises after mapping, concentrated in low and medium cost land tracts.
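The distinction between absolute and proportional gains is easy to lose track of, so here is a small worked example in Python. The discovery counts are hypothetical numbers I chose to illustrate the arithmetic; they are not Nagaraj's estimates.

```python
def mapping_effect(before, after):
    """Return the (absolute, proportional) change in discoveries after mapping."""
    absolute = after - before
    proportional = absolute / before
    return absolute, proportional


# Hypothetical discovery counts on low-cost tracts (made up for illustration).
senior_before, senior_after = 10.0, 14.0
junior_before, junior_after = 2.0, 4.0

senior_abs, senior_prop = mapping_effect(senior_before, senior_after)
junior_abs, junior_prop = mapping_effect(junior_before, junior_after)

# Share of all discoveries made by junior firms, before and after mapping.
junior_share_before = junior_before / (junior_before + senior_before)
junior_share_after = junior_after / (junior_after + senior_after)

print("senior:", senior_abs, senior_prop)
print("junior:", junior_abs, junior_prop)
print("junior share:", junior_share_before, "->", junior_share_after)
```

Here senior firms gain more discoveries in absolute terms (4 versus 2), but junior firms double their output while senior firms grow by 40%, so the junior share of discoveries rises.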

Metaphorical Maps

We find similar results when we turn from literal maps to metaphorical ones. Kao (2023) and Tranchero (2023) both examine the impact of genome mapping studies on innovation in drug discovery. Genome mapping studies scan the genes of people with specific diseases to identify all gene mutations that turn up more often than would be expected by chance. Since many drugs work by targeting specific genes that are causally related to diseases, identifying genes strongly associated with specific conditions helps drug companies prioritize potential drug targets and predict which drug candidates are more likely to succeed.

Kao (2023) looks at 168 systematic cancer mapping efforts published in prestigious journals. Each of these studies focused on a particular type of cancer; for example, a study might sequence a set of ovarian cancer tumors to identify all genes that tend to exhibit mutations in ovarian cancer cells. Tranchero’s study is instead focused on all diseases, and his genome-mapping data is based on 1,259 genome-wide association studies, which try to identify the genes that disproportionately exhibit mutations in people with a given disease. As discussed in Prediction Technologies and Innovation, both studies find that innovative effort around specific gene-disease pairs increases after associations between a disease and gene are discovered (relative to the level of innovative effort around gene-disease pairs with no associations found). Kao measures innovative effort with the number of phase II clinical trials focusing on a particular disease and gene, while Tranchero measures innovative effort with the number of new patent applications associated with a particular disease and gene.

Mapping studies provide one very particular type of information: they tell you that there is an unusually strong statistical association between a gene mutation and a disease. That’s useful to know, but it can sometimes mislead, because it turns out that strong associations between a gene and a disease are often spurious. Tranchero has an interesting way to identify this: sometimes a small study identifies an association between a gene and a disease, but then a bigger study comes along later and doesn’t find the same association. That suggests the first study just identified a false positive and there is no actual causal relationship between the gene and the disease. This happens surprisingly often - Tranchero finds that about 85% of disease-gene associations do not replicate in subsequent studies. So while a statistical association between a gene and a disease is suggestive, an ability to discern which associations make sense and are most likely to represent true causal relationships is a valuable complement to what genome mapping studies tell you.

It isn’t obvious which associations are meaningful and which are spurious. As noted in Prediction Technologies and Innovation, firms often seem to get tricked by these false positives; there is an increase in patent applications for gene-disease pairs when an association is found, even if that association later turns out to be spurious (by Tranchero’s definition). However, Tranchero also shows that firms that have some expertise related to the gene don’t get fooled by these false positives. To measure that, he looks at the publications associated with firms and labels firms that have published articles related to a particular gene as having “gene knowledge.” We can imagine that these firms have a better understanding of the role a gene might play, and hence a better ability to distinguish meaningful from spurious associations. And it turns out these firms are more likely to file patent applications when a genome-wide association study finds an association that is not subsequently overturned, but they are not more likely to file applications for associations that will turn out to be spurious.

Drug discovery requires solving many other sub-problems besides deciding if there is a link between a gene and a disease. For example, running clinical trials involves solving a lot of logistical and other sub-problems. Moreover, the kinds of challenges faced vary across different kinds of trials, and firms will have expertise related to running different kinds of trials. Firms whose expertise is most closely related to the other problems associated with running a clinical trial on a particular disease-gene pair should benefit more from learning about an association.

Kao has one proxy for this. She finds that after an association between a cancer and a gene is discovered, there is a larger increase in clinical trials for drugs that had been previously tested in similar settings (for example, for other diseases associated with the gene in question) than for totally novel drug applications. That’s consistent with there being a bigger response to mapping data from firms with expertise in the kinds of clinical trials you need to run if you take an association seriously. Again, experts can benefit more from a prediction technology if there are important other sub-problems that the prediction technology doesn’t help with, but expertise does.

In contrast, we can also predict that firms with expertise very closely related to the knowledge disclosed by mapping studies will benefit less from them. That seems to be the case. For example, for each company in her dataset, Kao looks to see if they have previously conducted phase II trials associated with a particular cancer, and if they have published an above-average number of articles about genome mapping. She takes that as a proxy that this firm is more likely to have private mapping information about associations related to that cancer. If private mapping data and public mapping data are close substitutes, this might be a good example of what happens when a prediction technology solves the sub-problem that an expert is good at. And here, rather than help the experts, she finds the firms with more publications about mapping respond to publications about associations between this particular cancer and a gene only about 40% as strongly as firms that are less likely to have private mapping information about the cancer and gene.

Judgement and AI Tools

For a final case study, let’s turn to a paper looking at the impact of AI on discovery in materials science, circa 2022. Toner-Rodgers (2024) looks at a large materials science company, which in mid-2022 began rolling out access to an AI tool to randomly selected research teams. This tool allowed researchers to specify desirable properties for new compounds, and predicted which kinds of compounds might have those properties. As discussed more in the post Prediction Technologies and Innovation, Toner-Rodgers found teams with access to the tool discovered substantially more new materials, measured in a variety of different ways.

Toner-Rodgers also has data on 1,018 scientists spread across 221 different research teams (this is apparently a very big company). That lets him see which kinds of scientists benefitted most from access to the tool.

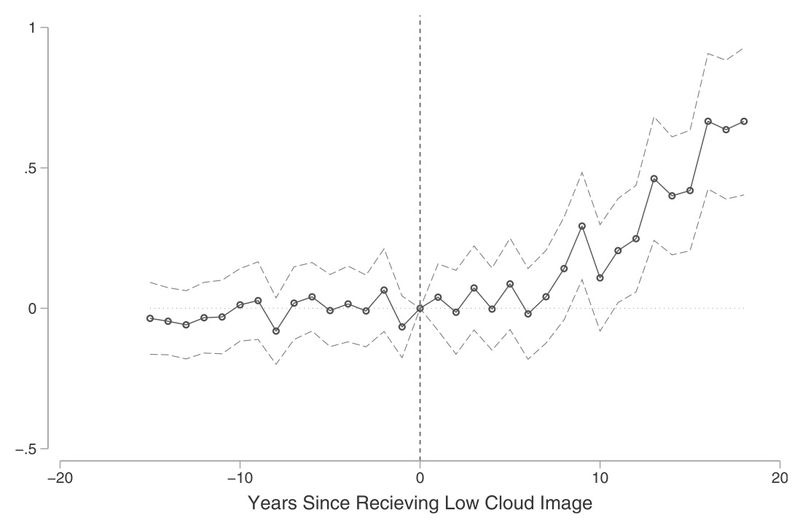

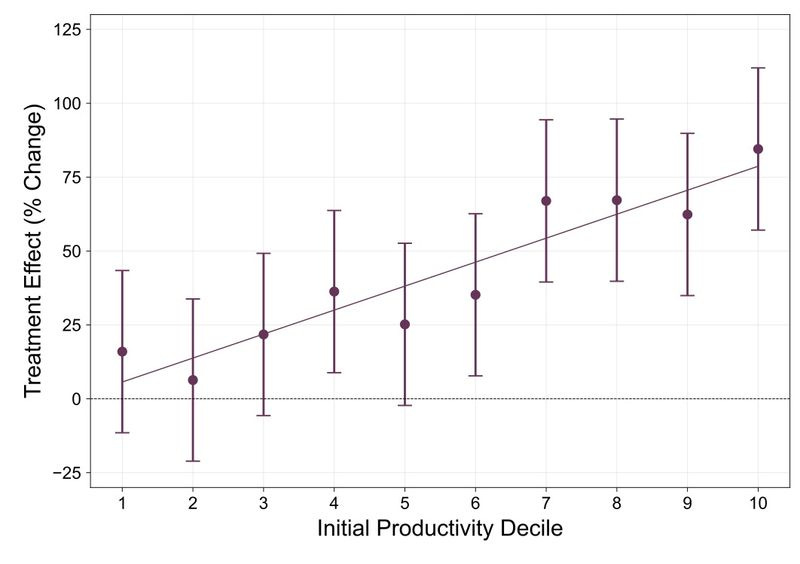

He starts by computing how many new materials each scientist tends to discover per year, before they had access to AI. He groups scientists into decile buckets (the 10% least productive, then the 10% next most productive, then the 10% next most productive and so on). Then, he compares the number of new materials being discovered by scientists with access to the new AI tool, to the number being discovered by scientists in the same productivity bucket, but who don’t have access to the tool. The results are in the figure below. The horizontal axis is the productivity decile; lower numbers indicate scientists who produced fewer new materials per year, prior to the introduction of AI.

If we think of the lower-productivity scientists as being analogous to the novices of this post and the higher-productivity scientists as being analogous to experts, then this is a very clear case of an AI tool helping the experts more than the novices. Indeed, we can’t statistically reject the hypothesis that the 30% of scientists with the lowest productivity don’t benefit at all from the AI tool; they do just as well as their similar colleagues without the tool. Meanwhile, scientists who were in the top 30% of productivity prior to the tool’s introduction produce 60-80% more new materials with AI than similar colleagues without it.[1]
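The bucket-by-bucket comparison described above can be sketched in a few lines of Python. The records below are hypothetical values I made up to mirror the qualitative pattern in the text (no gain at the bottom, a large gain at the top); they are not the paper's data, and the function name is my own.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records, made up for illustration only:
# (pre-period productivity decile, has AI tool, new materials per year)
scientists = [
    (1, False, 2.0), (1, True, 2.0),
    (5, False, 5.0), (5, True, 6.5),
    (10, False, 10.0), (10, True, 17.0),
]


def pct_gain_by_decile(rows):
    """Percent difference in output, tool users vs. non-users, within each decile."""
    outcomes = defaultdict(lambda: {True: [], False: []})
    for decile, has_tool, output in rows:
        outcomes[decile][has_tool].append(output)
    gains = {}
    for decile, groups in sorted(outcomes.items()):
        control = mean(groups[False])
        treated = mean(groups[True])
        gains[decile] = 100.0 * (treated - control) / control
    return gains


print(pct_gain_by_decile(scientists))
```

Comparing within deciles matters because it holds prior productivity fixed: each scientist with the tool is compared only to colleagues who were similarly productive before the tool arrived.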

If access to AI widens the gap between “novices” and “experts” (not really the right terms for the above figure, but it’s what we’re using in this post), then the discussion at the beginning of this post suggested that may be because experts are good at solving sub-problems that the AI does not help solve. Fortunately for Toner-Rodgers, scientists at this organization keep logs of their activities, which he has access to. A log will include the time a scientist began working on a task, how long they spent on it, and a text description of the task; scientists make 7.8 entries per week on average. These logs provide unusually detailed information on the kinds of sub-problems that scientists face in this organization.

They aren’t standardized, but Toner-Rodgers uses Claude-3.5 to label work activities with one of four categories: idea generation, evaluation, testing, and “other.” Here, “idea generation” refers to activities related to coming up with designs for novel compounds, “evaluation” refers to activities that help determine which designs to test (for example, by doing initial theoretical calculations or reviewing the literature), and “testing” involves running tests on proposed ideas to assess them for desirable properties.

Toner-Rodgers then runs a statistical model to try to infer each scientist’s skill at the idea generation and evaluation steps. The basic idea here is to use their work logs to figure out on which research projects a scientist was doing work on idea generation and on which projects they were doing work on evaluation (or they might be doing both). Toner-Rodgers assumes the probability a project succeeds (in this case, a new material is ultimately added to the firm’s internal database) is higher when the people assigned to idea generation have a higher skill for that task, and the people assigned to evaluation have a higher skill for that task. For example, suppose we have two hypothetical scientists, Alice and Bob. When Alice works on idea generation and Bob on evaluation, we notice that projects tend to succeed, but when Bob works on idea generation and Alice works on evaluation, projects tend to fail. When Alice and Bob each do both tasks, their success rate is somewhere in the middle. That set of facts lets us infer Alice is good at idea generation, but not evaluation, and Bob is the reverse. Toner-Rodgers runs a statistical model that infers a scientist’s skill using a similar idea.
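The Alice and Bob logic can be illustrated with a deliberately naive estimator: for each person and each role, take the success rate of the projects where they held that role. This is not Toner-Rodgers's statistical model, just a rough sketch of the intuition, and the project counts are invented.

```python
from collections import defaultdict

# Hypothetical project outcomes, made up for illustration only:
# (idea_generator, evaluator, successful_projects, total_projects)
project_outcomes = [
    ("Alice", "Bob", 9, 10),    # Alice generates, Bob evaluates: mostly succeed
    ("Bob", "Alice", 1, 10),    # roles swapped: mostly fail
    ("Alice", "Alice", 5, 10),  # one person does both: somewhere in the middle
    ("Bob", "Bob", 5, 10),
]


def role_success_rates(outcomes):
    """Success rate of projects, grouped by (role, person)."""
    tallies = defaultdict(lambda: [0, 0])  # (role, person) -> [successes, projects]
    for generator, evaluator, wins, total in outcomes:
        for key in (("generation", generator), ("evaluation", evaluator)):
            tallies[key][0] += wins
            tallies[key][1] += total
    return {key: wins / total for key, (wins, total) in tallies.items()}


rates = role_success_rates(project_outcomes)
for (role, person), rate in sorted(rates.items()):
    print(role, person, rate)
```

In this toy data, projects succeed 70% of the time when Alice generates ideas and 30% of the time when she evaluates them, with Bob showing the reverse pattern. A naive tally like this only works because the invented assignments are balanced; with real, unbalanced data you would need a proper model to separate one person's skill from their collaborators'.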

With these inferred skill levels, he then can ask who benefits most from using an AI tool: people good at idea generation or idea evaluation? The AI tool seems most likely to help at the idea generation stage, since it is designed to propose compounds that are likely to meet certain requirements. So we would predict that people good at idea evaluation benefit more from using the tool than people good at idea generation. And that’s basically what Toner-Rodgers finds: people skilled at each task benefit, but people skilled at idea evaluation enjoy about 4x the benefits of people good at idea generation.

Indeed Toner-Rodgers finds that, over time, the advantage of experts actually grows. This seems to be because after working with the AI tool, scientists begin to reallocate more and more of their time towards idea evaluation tasks, and away from idea generation tasks (see the figure below).

Echoes of Automation

To return to the beginning of our post, we asked which kind of inventor/scientist is more likely to benefit from artificial intelligence: novices or experts?

In theory it could go either way, since it depends on the kinds of sub-problems that AI helps with and whether those overlap substantially with the sub-problems where experts have typically had an advantage over novices. So we turned to empirics on various prediction technologies and found… it can sort of go either way.

In gold-mining, at least in proportional terms, novices benefit more when a prediction technology helps them identify new deposits, so long as other barriers to exploration (regulatory and institutional, for example) are small. Or the prediction technology can disproportionately help experts, so long as those other barriers are high, since expert firms are better at dealing with those barriers. In drug discovery, gene maps erode a lot of the advantages of experts who have private mapping data, but not the advantages of experts who have different kinds of expertise (for example, related to running clinical trials or the genes in question). For materials science researchers, novices in general don’t seem to benefit much from the tool, but there is still some variation in how much it helps different kinds of experts. Experts whose main strength is generating ideas benefit from the tool much less than experts skilled at evaluating ideas.

So in this case I think we have a remarkable agreement of theory and empirics. Who benefits most from a new prediction technology? It depends![2]

That’s a bit too nihilistic about what we’ve learned, though, I think. We have in fact made some progress: the impact of future AI on scientists and inventors will depend on your assumptions about what future AI will do well and how much that overlaps with existing expertise. My own view is that the current AI paradigm is going to keep getting better at identifying useful patterns in data, whether the protein data bank or the corpus of scientific journal articles. So to the extent an expert’s work is primarily based on mining patterns from data, AI is potentially going to erode their advantages over novices. I think this would be bad news for me if I made my living purely from writing New Things Under the Sun!

On the other hand, as your expertise gets further away from pulling patterns out of data, maybe experts will begin to benefit more from AI than novices. Maybe there isn’t a lot of data for the AI to work with - this could be the case in fields where tacit knowledge is important, or maybe on the cutting edge where experiments are generating knowledge but nothing has been written up yet. Alternatively, maybe pulling patterns from data is just a starting point for a lot of other work; in materials science, it suggests compounds, but you still need to test them.

Thanks for reading! As always, if you want to chat about this post or innovation in general, let’s grab a virtual coffee. Send me an email at matt@newthingsunderthesun.com and we’ll put something in the calendar.


[1] In this case, innovation is being measured by the number of new materials that the scientists are adding to an internal database of materials that show promise for use in products.

[2] See When the robots take your job for much more discussion of who benefits from automation.