In last week’s newsletter, we looked at a thought experiment by Jones and Summers that pretty convincingly argued the average return on a dollar of R&D was really high. That would seem to suggest we should be spending a lot more on R&D.

But the devil is in the details. How, exactly, should you increase your R&D spending? Today, let’s look at one kind of program that seems to work and would be an excellent candidate for more funds: the US’ Small Business Innovation Research (SBIR) program and the European Union’s SME instrument (which was modeled on the SBIR).

Grants for Small Business Innovation

The SBIR and SME instrument programs are competitive grant programs in which small businesses submit proposals for R&D grants from the public sector. Each program consists of two phases, where the first phase involves significantly less money than the second. For the SBIR program, a phase 1 award is typically up to $150,000 and a phase 2 award is roughly $1mn; for the SME instrument, phase 1 is just €50,000 and phase 2 is €0.5-2.5mn. In the US, firms apply for phase 1 first, and then phase 2, whereas in the EU firms can apply to either straightaway. Broadly speaking, the money is intended to be used for R&D type projects.

They’re pretty competitive. In the US, an application to the SBIR program run by the Department of Energy will typically take a full-time employee 1-2 months to complete and has about a 15% chance of winning a phase 1 award; conditional on winning a phase 1 award, firms have about a 50% chance of winning a phase 2 award (or overall chances about 8%). In the EU, the probability of winning in phase 1 is about 8%, and in phase 2, just 5%.
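The overall odds quoted for the DOE program are just the two stage probabilities multiplied together. A quick check, using only the figures in the text (the variable names are mine):

```python
# Win rates for the DOE SBIR program, as quoted in the text.
p_phase1 = 0.15               # chance an application wins a phase 1 award
p_phase2_given_phase1 = 0.50  # chance a phase 1 winner goes on to win phase 2

# Chance that a fresh applicant eventually ends up with a phase 2 award.
p_phase2_overall = p_phase1 * p_phase2_given_phase1

print(f"Overall chance of a phase 2 award: {p_phase2_overall:.1%}")
```

That works out to 7.5%, which is the "about 8%" above.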

So, both programs involve the government attempting to pick winning ideas, and then giving the winners money to fund R&D. How well do they work? Does the money generate innovations? Does it get the good return on investment that Jones and Summers’ thought experiment implies should be possible?

Evaluating the Impact of Grants

Two recent papers look at this question using the same method. Howell (2017) looks at the Department of Energy’s SBIR program ($884mn disbursed over 30 years), while Santoleri et al. (2020) looks at the SME instrument program (€1.3bn disbursed over 2014-2017). Each paper has access to details on all applicants to the program, not merely the winners. That means they can follow the trajectory of businesses that apply and get a grant as well as those that apply but fail to get an R&D grant.

But to assess the impact of money on innovation, they can’t just compare the winners to the losers, because the government isn’t randomly allocating the money: it’s actively trying to sniff out the best ideas. That means the winning applicants would probably have done better than the losers, even if they hadn’t received any R&D funds, since someone thought they had a more promising idea. But the way these programs are administered has a few quirks that allow researchers to estimate the causal impact of getting money.

During the period studied, each program held lots of smaller competitions devoted to a specific sector or technology. For example, the Department of Energy might solicit proposals for projects related to Solar Powered Water Desalination. Within each of these competitions, proposals are scored by technical experts and then ranked from best to worst. After this ranking is made, overall budgets are drawn up for the competitions (without reference to the applications received), and the best projects get funded until the money runs out. For example, the Department of Energy might receive 11 proposals for its solar powered water desalination topic, but (without looking at the quality of the proposals) decide it will only be able to fund 3. The top three each get their $150,000, and the fourth gets nothing.

The important thing is that, for applications right around the cut-off, although there is a big change in the amount of money received, there shouldn’t be a big change in the quality of the proposals (in our example, the difference between third and fourth place shouldn’t be abnormally large). That is, although we don’t have perfect randomisation, we have something pretty close. Proposals on either side of the cutoff for funding differ only a bit in the quality of their proposals, but experience a huge difference in their ability to execute on those proposals because some of them get money and some don’t. It’s a pretty close estimate of the causal impact of getting the money.

What’s the Impact?

Each paper looks at a couple measures of impact. A natural place to start when evaluating the impact of small business innovation is patents. In each case, patents are weighted by how many citations they end up receiving (while citations might be a problematic measure of knowledge flows, they seem to be quite good as a way of measuring the value of patents: better patents seem to get more citations). In the text, I’ll just call them patents, but you should think of them as “patents adjusted for quality.” The papers produce some nice figures (Howell left, Santoleri et al. right).

These figures nicely illustrate the way the impact of cash is assessed in these papers. Especially in the left figure, you can see that, as we worried, proposals that are ranked more highly by the SBIR program do tend to get more patents: the SBIR program does have the ability to judge which projects are more likely to succeed. Even looking at projects that don’t get funding (the ones to the left of the red line), Howell’s measure of patenting rises by about 0.2 log points at each rank, as we move from -3 to -2 to -1. If that trend were to continue, we would expect the +1 ranked proposal to jump another 0.2, to something a bit less than 0.8. But instead, it jumps more than twice as much. That’s pretty suggestive that it was the funding that mattered. Estimated more precisely, Howell finds getting one of the SBIR’s phase 1 grants increases patenting by about 30%. Santoleri et al. (2020) get similar results, estimating a 30-40% increase in patenting from getting a phase 2 grant (though note that the phase 2 grants in the EU tend to be a lot larger than the phase 1 US grants).
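The logic of "extend the trend, then see how far the first funded proposal sits above it" can be sketched in a few lines. The numbers below are invented to mimic the pattern described above; they are not read off Howell’s figure, and the papers themselves estimate the jump with regressions over many competitions rather than this simple extrapolation.

```python
# Illustrative outcomes by centered rank (log cite-weighted patents).
# ASSUMPTION: these values are made up to echo the pattern in the text;
# they are NOT taken from Howell (2017).
unfunded = [0.15, 0.35, 0.55]  # ranks -3, -2, -1 (just missed funding)
first_funded = 1.00            # rank +1 (the lowest-ranked funded proposal)


def average_step(values):
    """Average change in the outcome from one rank to the next."""
    steps = [later - earlier for earlier, later in zip(values, values[1:])]
    return sum(steps) / len(steps)


trend = average_step(unfunded)               # rise per rank among the unfunded
expected = unfunded[-1] + trend              # rank +1 if the trend just continued
jump_beyond_trend = first_funded - expected  # the part we attribute to funding

print(f"Trend per rank among unfunded proposals: {trend:.2f}")
print(f"Expected outcome at rank +1 without funding: {expected:.2f}")
print(f"Observed outcome at rank +1: {first_funded:.2f}")
print(f"Jump beyond the trend: {jump_beyond_trend:.2f}")
```

With these toy values the unfunded proposals rise by about 0.2 per rank, so the trend alone would put rank +1 at about 0.75; anything above that is the discontinuity the papers are after.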

To the extent we’re happy with patents as a measure of innovation, we’ve already shown that the program successfully manages to buy innovations. But the papers actually document a voluminous set of additional indicators all associated with a healthy and flourishing innovative business (in all cases below, SBIR refers to phase 1 and SME refers to phase 2):

Winning an SBIR grant doubles the probability of getting venture capital funding; winning an SME instrument grant triples the probability of getting private equity funding

Winning an SBIR grant increases annual revenue by $1.3-1.7mn, compared to an average of $2mn

Winning an SME instrument grant increases the growth rate of company assets by 50-100%, the growth rate of employment by 20-30%, and significantly decreases the chances of the firm failing.

Benchmarking Value for Money

OK, so winning money helps firms. Is that surprising? Do we really need scientists to tell us that? In fact, it’s not guaranteed. When Wang, Li, and Furman (2017) apply this methodology to a similar program in China they don’t find the money makes a statistically significant difference. That could be for a lot of reasons (discussed in the paper), but the main point is simply that we can’t take for granted seemingly obvious results like “giving firms money helps them.”

But still, even if we find R&D grants help firms, that doesn’t necessarily imply it’s a good use of funds. We want to know the return on this R&D investment. That’s challenging because although we know the cost of these programs, it’s hard to put a solid monetary value on the benefits that arise from them, which is what we would need to do to calculate a benefits cost ratio.

So let’s take a different tack. One thing we can measure reasonably well is whether firms get a patent. So let’s just see how many patents these programs generate per R&D dollar and compare that to the number of patents per R&D dollar that the private sector generates. If we assume the private sector knows what it’s doing in terms of getting a decent return on R&D investment, then that gives us a benchmark against which we can assess the performance of these government programs.

So how many patents per dollar does the private sector get? If you divide US patent grants (from domestic companies) over 2010-2017 by R&D funded by US businesses in the same year, you pretty consistently get a ratio of around 0.5 patents per million dollars of R&D (details here). That’s about the same ratio as this post finds, looking only at 7 top tech companies.

To be clear, the point isn’t that each patent costs 2 million dollars of R&D. R&D doesn’t just go into patents. This report found in 2008 that only about 20% of companies that did R&D reported a patent. Taking that as a benchmark, suppose that only 20% of inventions get patented; in that case, we could think of this as telling us that every $2mn in R&D generates 5 “innovations” of which one gets patented. As long as SBIR/SME grant recipients have a similar ratio between innovation and patenting as other US R&D performing firms, then looking at patents per R&D for them is an OK benchmark for the productivity of R&D spending.
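Here is that back-of-envelope arithmetic spelled out. Both inputs come from the text; treating the 20% of R&D-performing firms that patent as the share of innovations that get patented is the same simplifying assumption made above, not an established fact.

```python
patents_per_million = 0.5  # private-sector benchmark: patents per $1mn of R&D
share_patented = 0.20      # ASSUMPTION from the text: 1 in 5 innovations is patented

rd_per_patent_mn = 1 / patents_per_million         # $mn of R&D behind each patent
innovations_per_patent = 1 / share_patented        # innovations behind each patent
innovations_per_million = patents_per_million / share_patented

print(f"R&D per patent: ${rd_per_patent_mn:.1f}mn")
print(f"Innovations per patent: {innovations_per_patent:.0f}")
print(f"Innovations per $1mn of R&D: {innovations_per_million:.1f}")
```

So $2mn buys five innovations, one of which shows up as a patent, or 2.5 innovations per million dollars.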

If that sounds good enough to you, read on! If not, I say a bit more about this in an extra note at the end of this post. Feel free to check that out and then come back here if you’re feeling skeptical.

So do these programs generate patents at a similar rate of 0.5 per million? Yes!

Value for money in the SBIR Program

This isn’t something that Howell (2017) or Santoleri et al. (2020) calculate directly, but you can back out estimates from their results using a method described in the appendix of our next paper, Myers and Lanahan (2021). Myers and Lanahan estimate Howell’s results imply the DOE SBIR program gets about 0.8-1.3 patents per million dollars. Applying their method to the range of estimates in Santoleri et al. (2020) and converting into dollars, you get something in the ballpark of 0.7 patents per million dollars in the SME instrument program (see the extra notes section at the bottom for more on where that number comes from). In either case, that compares pretty favorably with a rough estimate of 0.5 patents per million R&D dollars for the US private sector.

That’s reassuring, but it’s not exactly what we’re interested in. As stated at the outset, Jones and Summers’ thought experiment implies that R&D is a really good investment once you take into account all the social benefits. What we have here is evidence that the SBIR and SME instrument programs can probably match the private sector in terms of figuring out how to wisely spend R&D dollars to purchase innovations. Frankly, that seems plausible to me. It just means governments, working with outside technical experts (that even the private sector might need to turn to) could do about as well as the private sector. But they don’t tell us much about the benefits that accrue from these R&D investments that aren’t captured by the patents the grant recipients get.

But that’s what Myers and Lanahan (2021) is about. What they would like to see is how giving R&D money to different technology sectors leads to more patents in that sector by grant recipients, as well as other impacts on patenting in general. For example, if we give a million dollars to a couple firms working on solar powered water desalination, how many new solar water patents do we get from those grant recipients? What about solar water patents from other people? What about patents that aren’t about solar powered water desalination at all?

Like Howell, they’re going to look at the Department of Energy’s SBIR program. They need to use a different quirk of the SBIR though, because they’re not comparing firms that get funds to firms that don’t; they’re comparing entire technology fields that get more money to fields that get less money.

Instead, they rely on the fact that some US states have programs to match SBIR funding with local funds. Importantly, DOE doesn’t take that into account when deciding how to dole out funds. For example, in 2006, North Carolina began partially matching the funds received by SBIR winners in the state. If a bunch of winning applicants in solar technology happen to reside in, say, North Carolina in 2008 instead of South Carolina in 2008 or North Carolina in 2005, then those recipients get their funds partially matched by the state and solar technology research, as a field, gets an unexpected windfall of R&D dollars. What Myers and Lanahan end up with is something close to random R&D money drops for different kinds of technologies.

Myers and Lanahan use variation in this unexpected “windfall” money to generate estimates of the return on R&D dollars. For this to work, you have to believe there are no systematic differences between SBIR recipients that reside in states with matching programs and those that don’t, and they present some evidence that this is the case.

One more hurdle though. You can crudely measure innovation by counting patents. And, with some difficulty, you can come up with estimates of more-or-less random R&D allocations to different technologies. But if you want to see how the one affects the other, you have to link patents to SBIR technology areas. Myers and Lanahan accomplish this with natural language processing. For every SBIR grant competition, they analyze the text of the competition description and identify the patent technology categories whose patents are textually most similar to this description. When, say, solar technology gets a big windfall of R&D money, they can see what happens to the number of patents in the patent technology categories that historically have been textually closest to the DOE’s description of what it was looking to fund. And this is also how they measure the broader impact of SBIR money on other technologies. When solar gets a big windfall, what happens to the number of patents in technology categories that are not solar, but are kind of “close” to solar technology (as measured by text similarity)?
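To give a flavor of what "textually most similar" can mean, here is a minimal sketch that ranks patent categories by the cosine similarity of their word counts to a competition description. This is my stand-in, not Myers and Lanahan’s actual pipeline (the text above only says they use natural language processing), and the topic and category descriptions are hypothetical.

```python
import math
import re
from collections import Counter


def word_counts(text):
    """Lowercase the text and count its words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical competition description and patent category summaries.
topic = "solar powered water desalination systems"
categories = {
    "solar thermal collectors": "solar collectors that heat water using solar energy",
    "membrane desalination": "membranes for desalination and purification of water",
    "wind turbines": "rotor blades and generators for wind turbines",
}

topic_vec = word_counts(topic)
scores = {
    name: cosine_similarity(topic_vec, word_counts(description))
    for name, description in categories.items()
}

# Categories from textually closest to textually most distant.
ranked = sorted(scores, key=scores.get, reverse=True)
for name in ranked:
    print(f"{name}: {scores[name]:.2f}")
```

Categories near the top of a ranking like this play the role of the "closest" fields, while those further down (but still above zero) are the "close but not the same" fields used to look for spillovers.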

OK! So that’s what they do. What do they find? More money for a technology means more patents!

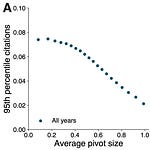

In the above figure, each dot is a patent technology group and compares the funding received by that technology to subsequent patenting. The figure looks at the patents of DOE SBIR recipients only and nicely illustrates the importance of doing the extra work of trying to estimate “windfall” funding. The steep dotted green line is what you get if you just tally up all the funding the SBIR program gives to different technologies - it looks like a little more funding gives you a lot more patents. But this is biased by the fact that the technologies that get the most money were already promising (that’s why they got money!). The flatter dark blue line is the relationship between quasi-random windfall money and patenting. It’s still the case that more money gets you more patents, but the relationship isn’t as strong as the green one. But this is the more informative estimate of the actual impact of cash.
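A toy example shows why the naive line comes out too steep. Everything here is made up: four hypothetical technology fields, a "true" effect of 0.5 patents per million that I picked to echo the text, and windfall money that is unrelated to how promising a field is. It is a sketch of the bias, not of Myers and Lanahan’s estimation.

```python
def ols_slope(xs, ys):
    """Slope from a simple least-squares regression of ys on xs."""
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    return covariance / variance


TRUE_EFFECT = 0.5  # ASSUMPTION: patents per $1mn, chosen for illustration

# Four hypothetical fields: (promise, windfall in $mn).
# Windfall is deliberately unrelated to promise.
fields = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Promising fields get more planned funding AND patent more on their own.
planned = [1 + 2 * promise for promise, _ in fields]
windfall = [w for _, w in fields]
total = [p + w for p, w in zip(planned, windfall)]
patents = [TRUE_EFFECT * t + 2 * promise for t, (promise, _) in zip(total, fields)]

naive_slope = ols_slope(total, patents)        # mixes funding with promise
windfall_slope = ols_slope(windfall, patents)  # uses only the as-good-as-random part

print(f"Naive slope (all funding): {naive_slope:.2f}")
print(f"Windfall slope: {windfall_slope:.2f}")
```

The naive slope comes out at 1.3 because promising fields both attract money and patent more; the windfall slope recovers the 0.5 that was built in.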

Using estimates based on windfall funding, an extra million dollars is associated with SBIR recipients being granted about 0.5 additional patents. Which is pretty typical (or so I’ve argued here). But the more important finding is that’s only a fraction of the overall benefit. When there’s more R&D in a given technology sector, we typically think that creates new opportunities for R&D from other firms, because they can learn from the discoveries made by the grant recipient. Indeed, other papers have found spillovers are often just as important, or even more important, than the direct benefits to the R&D performer.

Myers and Lanahan get at this in two ways. First, they look for an impact of R&D funding not only on the patent technology classes that are closest to SBIR’s description of the funding competition, but also ones that are more textually distant. Typically, a bigger share of extra patent activity comes from classes that are not the closest fields, but still closer than a random patent (consistent with other work). Second, they look at patents held by people who are not SBIR recipients themselves, but who live closer or farther away from SBIR recipients.

So, looking only at SBIR recipients, an extra million tends to produce an extra 0.5 patents. Looking at patents belonging to anyone in the same county as an SBIR recipient - a group we might assume is likely to contain people with similar technical expertise and possibly overlapping social and professional networks - an extra million tends to produce an extra 1.4 patents (across a wide range of technology fields). And looking at all US patents (from inventors residing anywhere in the world), an extra million tends to produce an extra 3 patents.

If all those patents are equally valuable, that would imply when the SBIR gives out money, the innovation outputs created by recipients are only a small part of the overall effect (0.5 of 3 total patents). Of course, all those patents are not, in fact, equally valuable. The ones created by the grant recipients tend to be more highly cited than the ones that we’re attributing to knowledge spillovers. Still, Myers and Lanahan estimate that after adjusting for the quality of patents, half the value generated by an SBIR grant is reflected in the patents of non-recipients working on different (but not too different) technology.

Prospects for Scaling Up

Whew! That’s a lot. To sum up: we’ve got some good theoretical reasons to think the return on R&D is very high, on average. If we look at a specific R&D program that gives R&D grants to small firms, the grants are effective at funding innovation at about the same level as the private sector could manage. And if we try to assess the broader impact of that funding, we find including all the social benefits gives us a return at least twice as high as the ones we got by focusing just on the grant recipients; and those were already decent! All together, more evidence that we ought to be spending more on R&D.

Lastly, we have good reason to think these effects can also be maintained if we scale up these programs. The design of Howell (2017) and Santoleri et al. (2020) is premised on estimating the impact of R&D funding on firms right around the cut-off. For the purposes of scaling up, that’s great news, because if we increased funding, the firms that would get extra money would be the ones closest to the cut-off.

If you liked this post, you might also like:

More science leads to more innovation (the link between the production of science and technological innovation)

Free knowledge and innovation (another innovation policy that works: disseminating knowledge freely)

Importing knowledge (and another innovation policy that works: immigration)

Extra credit

One reason patents per R&D dollar might be a bad benchmark in this case is if we think small firms like the ones getting R&D grants are more likely to seek patents than the typical R&D performing firm. There are some good reasons to think that’s the case: basically, they’re small but they aim to grow on the back of their technologies and so they need all the protection they can get. But Howell (2017) and Santoleri et al. (2020) find the median firm in these programs still has zero patents (even after winning an award). If just 20% of firms that do R&D also have a patent, then these grant recipients can’t be much more than twice as likely to get patents as everyone else. Alternatively, if you compare patents per dollar of small firms to large ones for the USA, they don’t look that different in aggregate. Nonetheless, I fully concede R&D is certainly a noisy predictor of patents; but I’m out of other ideas.

Santoleri et al. (2020) find the mean patents per firm is 4 among phase 2 applicants, and that getting a phase 2 grant increases cite-weighted patenting by 15-40%. That implies between 0.15 x 4 = 0.6 and 0.4 x 4 = 1.6 patents for every €0.5-2.5mn, or 0.2-3.2 patents per million euros, or 0.2-2.9 patents per million dollars. Using the midpoint of each, you get 0.7 patents per million dollars.
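For anyone who wants to retrace that calculation, here it is step by step. Two things are my assumptions rather than stated above: an exchange rate of 1.1 dollars per euro (the rate implied by 3.2 per million euros becoming 2.9 per million dollars), and reading "the midpoint of each" as the midpoint of the extra patents divided by the midpoint of the grant size.

```python
mean_patents_per_firm = 4               # phase 2 applicants, from the text
effect_low, effect_high = 0.15, 0.40    # increase in cite-weighted patenting
grant_low_mn, grant_high_mn = 0.5, 2.5  # phase 2 grant size, million euros
usd_per_eur = 1.1                       # ASSUMPTION: rate implied by the text's conversion

# Extra patents per grant recipient.
extra_low = effect_low * mean_patents_per_firm    # 0.6
extra_high = effect_high * mean_patents_per_firm  # 1.6

# Widest possible range: fewest patents on the biggest grant,
# most patents on the smallest grant.
per_mn_eur_low = extra_low / grant_high_mn
per_mn_eur_high = extra_high / grant_low_mn
per_mn_usd_low = per_mn_eur_low / usd_per_eur
per_mn_usd_high = per_mn_eur_high / usd_per_eur

# ASSUMPTION: "midpoint of each" = midpoint patents over midpoint grant size.
mid_extra = (extra_low + extra_high) / 2
mid_grant_mn = (grant_low_mn + grant_high_mn) / 2
mid_per_mn_usd = mid_extra / mid_grant_mn / usd_per_eur

print(f"Per million euros: {per_mn_eur_low:.1f} to {per_mn_eur_high:.1f}")
print(f"Per million dollars: {per_mn_usd_low:.1f} to {per_mn_usd_high:.1f}")
print(f"Midpoint, per million dollars: {mid_per_mn_usd:.1f}")
```

Under those assumptions the ranges and the 0.7 midpoint match the figures quoted above.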