This post was jointly written by me and Caroline Fry, assistant professor at the University of Hawai’i at Manoa! Learn more about my collaboration policy here.

This article will be updated as the state of the academic literature evolves; you can read the latest version here. You can listen to this post above, or via most podcast apps here.

According to most conventional measures of scientific output, the majority of global research takes place in a handful of countries. In the figure below, we pulled data on three measures of R&D efforts across every country in the world: number of scientific/technical articles published by researchers in a country, number of researchers engaged in R&D in a country, and R&D spending by country. We then combined that data with information on the population of every country to create the following chart, which shows the share of R&D occurring in countries with some share of the earth’s population.

According to this data, countries with about 12% of the world’s people produce half the world’s research. On the other side of the coin, half the world’s population resides in countries that collectively produce about 9% of scientific articles. The ratios are even more skewed if we rely on data on R&D spending or the number of researchers. Put differently, much of the world’s population lives in countries in which little research happens.
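The calculation behind a chart like this can be sketched in a few lines. This is a minimal illustration, not the code used for the figure: the three toy countries are invented, and ranking countries by research output per person (and splitting a country proportionally when a threshold falls inside it) are our assumptions about how to build the curve.

```python
from typing import List, Tuple

# (name, population, research output), e.g. articles, researchers, or R&D dollars.
Country = Tuple[str, float, float]


def concentration_curve(countries: List[Country]) -> List[Tuple[float, float]]:
    """Cumulative (population share, output share), most research-intensive first.

    Assumes every country has a strictly positive population.
    """
    total_pop = sum(pop for _, pop, _ in countries)
    total_out = sum(out for _, _, out in countries)
    # Assumption: rank countries by output per person, highest first.
    ranked = sorted(countries, key=lambda c: c[2] / c[1], reverse=True)
    points = [(0.0, 0.0)]
    pop_so_far = out_so_far = 0.0
    for _, pop, out in ranked:
        pop_so_far += pop
        out_so_far += out
        points.append((pop_so_far / total_pop, out_so_far / total_out))
    return points


def population_share_for_output(points: List[Tuple[float, float]], target: float) -> float:
    """Smallest population share whose countries produce `target` of all output."""
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y1 >= target:
            if y1 == y0:
                return x0
            # Linear interpolation inside the country that crosses the target.
            return x0 + (x1 - x0) * (target - y0) / (y1 - y0)
    return 1.0


def output_share_for_population(points: List[Tuple[float, float]], pop_share: float) -> float:
    """Output share produced by the most research-intensive `pop_share` of people."""
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x1 >= pop_share:
            return y0 + (y1 - y0) * (pop_share - x0) / (x1 - x0)
    return 1.0


# Hypothetical toy data, for illustration only.
TOY = [("A", 10.0, 50.0), ("B", 30.0, 30.0), ("C", 60.0, 20.0)]
curve = concentration_curve(TOY)
top_share = population_share_for_output(curve, 0.5)
bottom_half_output = 1.0 - output_share_for_population(curve, 0.5)
print(f"Half of output comes from countries with {top_share:.0%} of people")
print(f"The least research-intensive half of people produce {bottom_half_output:.1%}")
```

In this toy world, 10% of the population accounts for half of research output, and the least research-intensive half of the population accounts for roughly 17% of it. These are the same two summary statistics quoted above for the real data.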

Is this a problem? According to classical economic models of the “ideas production function,” ideas are universal; ideas developed in one place are applicable everywhere. If this is true, then where research takes place shouldn’t be a problem. Indeed, if research benefits from clustering, we would actually prefer to concentrate our research communities into a small number of places.1

This is probably true enough for some contexts. But there are at least two problems here. First, as has been well established in the literature on technology diffusion, there are significant frictions associated with the diffusion of knowledge over geographic distances.2 Second, and what we plan to discuss in this post, research may be less useful in countries where it did not occur – or, nearly as consequential, people may believe this to be so. In this post we’ll look at four domains - agriculture, health, the behavioral sciences, and program evaluation research - where new discoveries do not seem to have universal application across all geographies.3

Different places, different problems

In a previous post we discussed some evidence that researchers tend to focus on problems in their local area. To the extent that problems vary around the world, the geographic distribution of researchers will then shape how much research each location’s problems receive, irrespective of how well knowledge diffuses. If the problems of places with few researchers differ from the problems of places with many, then some problems will be under-researched if researchers focus on what’s happening locally. So the first important question is: do problems vary around the world?

Of course they do.

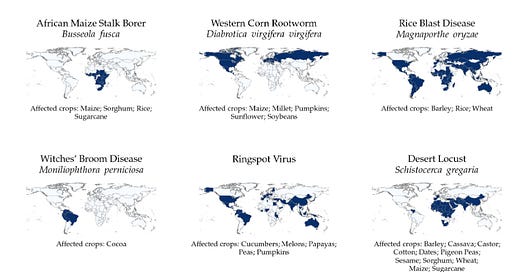

We can start with Moscona and Sastry (2022), which documents that the prevalence of crops and pests varies around the world. In a first step of their analysis, they use a dataset on international crop pests and pathogens from the Centre for Agriculture and Bioscience International to map the prevalence of crop pests or pathogens around the world, documenting significant variation in where crops and their associated pests and pathogens tend to be found.

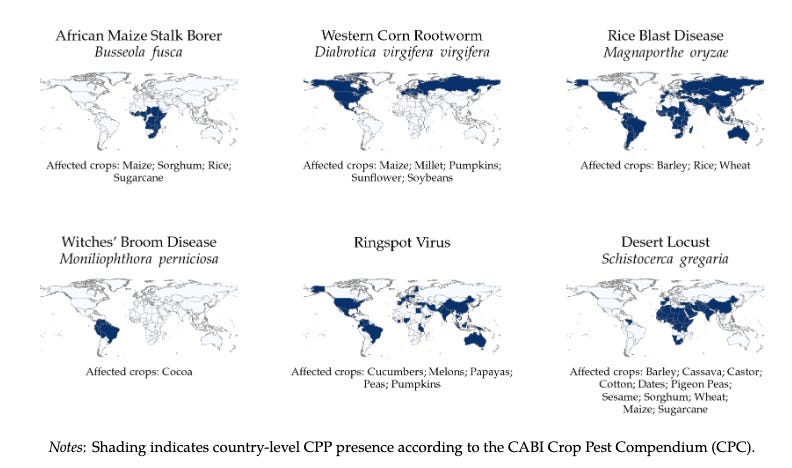

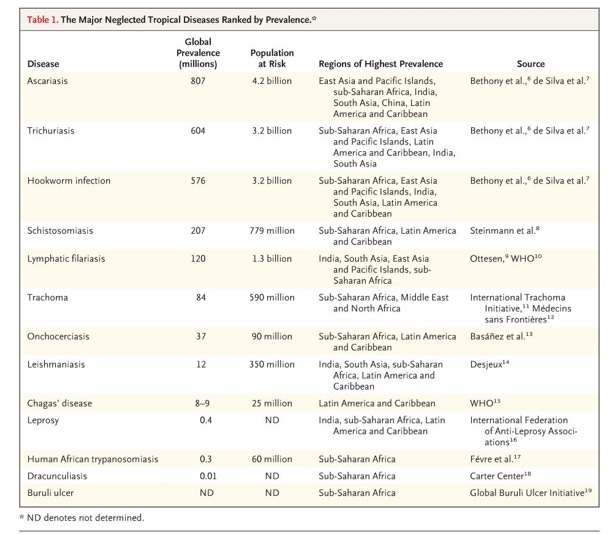

Similarly, it is well documented that diseases also vary around the world, due to variations in animal hosts, local climates, demographics, and socioeconomic conditions (see Wilson 2017 for a review).4 For example, the 13 parasitic and bacterial infections that make up ‘neglected tropical diseases’ primarily occur in low-income countries in sub-Saharan Africa, Asia and Latin America (Hotez et al. 2007).

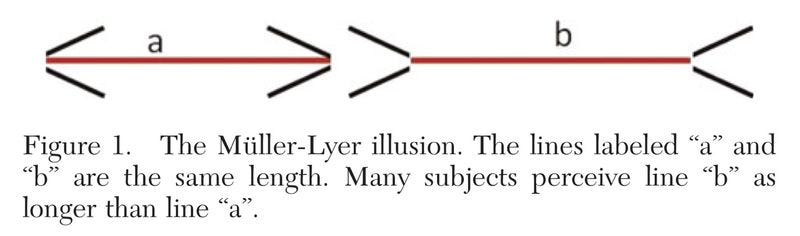

Going beyond differences in the pest and disease burden, a well-known 2010 article by Henrich, Heine, and Norenzayan documents extensive variation in human psychology study results depending on the population under study. In particular, they emphasize that the study populations in behavioral science research are overwhelmingly drawn from Western, Educated, Industrialized, Rich, and Democratic – so-called “WEIRD” – countries (indeed, they point to one study showing nearly 70% of subjects in top psychology journals came from the USA alone!). They show that along many important dimensions, findings that are derived from WEIRD samples do not generalize to the broader human population. To take one example, Henrich and coauthors point to a 1966 cross-cultural study about the Müller-Lyer illusion, presented below. In this study, American undergraduates were more likely to perceive line b to be longer than line a, though the two are actually equal in length. Others, such as San foragers of the Kalahari, tended to perceive the lines to be of equal length.

A 2020 retrospective by Apicella, Norenzayan, and Henrich, which looked back on the decade since the 2010 article, found that samples drawn from WEIRD countries continued to dominate major journals, even as (infrequent) studies continued to find that variation across countries is important.5

Finally, economics presents another domain where results in one country may not generalize to others. For example, Vivalt (2020) assesses the extent to which results from impact evaluations of economic development interventions generalize to new contexts. To do so, the author compiles a dataset of all results across hundreds of impact evaluations covering 20 types of development programs (as an example, one type of development program is conditional cash transfers). Vivalt summarizes the variation by intervention and by intervention-outcome, using meta-analysis methods, and documents that there exists significant variation for the same intervention-outcome across contexts, and that this variation is greater than the variation that exists across other types of interventions, such as medical interventions.
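To make "variation across contexts" a bit more concrete, here is a minimal sketch of one standard way meta-analysts quantify heterogeneity: Cochran's Q, the I² statistic, and the DerSimonian-Laird estimate of between-study variance. We are not claiming these are the exact measures Vivalt uses; the function name and the example numbers are hypothetical.

```python
from typing import Dict, List


def heterogeneity(effects: List[float], variances: List[float]) -> Dict[str, float]:
    """Summarize between-study heterogeneity for one intervention-outcome pair.

    effects:   estimated effect from each study (same units across studies)
    variances: sampling variance (squared standard error) of each estimate
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    w_sum = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / w_sum
    # Cochran's Q: weighted squared deviations from the pooled estimate.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    excess = max(0.0, q - df)
    # I^2: share of total variation attributed to real differences across studies.
    i_squared = excess / q if q > 0 else 0.0
    # DerSimonian-Laird estimate of the between-study variance.
    c = w_sum - sum(w * w for w in weights) / w_sum
    tau_squared = excess / c if c > 0 else 0.0
    return {"pooled": pooled, "Q": q, "I2": i_squared, "tau2": tau_squared}


# Hypothetical example: the same program evaluated in two different contexts.
print(heterogeneity([0.1, 0.5], [0.01, 0.01]))
```

A high I² says that most of the spread in estimates reflects genuine differences between settings rather than sampling noise, which is the sense in which a result from one context is a poor guide to another.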

Trust of evidence from different places

So the problems related to agriculture, disease, human psychology, and economic development are not universal but vary substantially from region to region. If research done in one region is more likely to be related to the problems of that region (and we argued it is, here), then the substantial concentration of research means a lot of problems are receiving very little research effort.

Decision-makers’ beliefs also matter. If people believe research done elsewhere isn’t applicable to their context, then that research is less likely to inform their decisions, even when it actually is applicable. And some papers indicate this concern is a real one.

Two recent papers attempt to isolate this mechanism in the context of program evaluation evidence. Vivalt et al (2023) and Nakajima (2021) both investigate how policymakers evaluate potentially relevant research, using experiments that survey policymakers on their views about different hypothetical research papers. In both of these papers, the authors provide policymakers with evidence from sets of hypothetical impact evaluations, and ask them to rank or rate which evaluations they prefer. These hypothetical evaluations vary in their methodologies (RCTs versus observational studies), results, sample size, and, importantly for this post, the location of the study. The two studies find similar results: policymakers tend to prefer studies conducted in settings similar to their own country, and ideally in their own country (Vivalt et al 2023).

Some related evidence from medical research has similar implications. Alsan et al (forthcoming) use a similar approach, a survey experiment, to assess how doctors and patients interpret the results of clinical trial data. In this study the authors provided profiles of hypothetical diabetes drugs, which included the drug’s mechanism of action and supporting clinical trials. In a supplementary experiment the authors asked respondents in the United States how much they trusted results from clinical trials conducted in different countries. They found that respondents tended to be less confident about the effectiveness of a drug tested outside of the United States, and several respondents expressed concerns that the drug would not work in the same way due to biological, socioeconomic, and environmental factors.

(As an aside, geography is of course not the only factor affecting which kinds of populations are underserved by research. The primary experiment in Alsan et al. (forthcoming) is actually about whether representation of different racial groups in clinical trials influences the likelihood that physicians would recommend that drug to their patients, and whether patients would adhere to the drug regimen. The study randomized the share of Black trial subjects and average drug efficacy in trials across drug profiles. Physicians were asked to indicate their intent to prescribe the drugs, and in a separate experiment, hypertension patients were asked about their interest in novel therapies to treat hypertension that had been tested in trial sites with varying shares of Black participants. They found that physicians were more likely to state an intention to prescribe drugs that had been tested on representative samples, and that this effect was driven by doctors who routinely saw Black patients. As for the patients, Black respondents were more likely to state that a drug would work for them if the trial was representative.)

So another rationale for conducting research in different countries, besides that it may be necessary to do good science, is that the uptake of research depends partially on where it’s done.

So what?

So what does this mean in terms of actual outcomes in places with fewer researchers? Or, put differently, is there any evidence on the consequences of inappropriate research?

Moscona and Sastry (2022) provides one estimate of how much this matters, at least in the context of agriculture, in a multi-step analysis. First, they measure the extent to which countries’ crop and pest prevalence overlaps with that of more innovative locations, generating a ‘mismatch’ measure at the country-crop pair level. Second, they measure the role of this ‘mismatch’ in cross-border technology transfer between pairs of countries, showing that mismatch with frontier countries is what matters most for non-frontier countries. In concrete terms, countries with a pest burden very different from that of places like the USA tend to use fewer plant varieties originally bred in the USA. Third, they regress crop output on mismatch with frontier countries (that is, countries that produce more research on those crops), holding constant country and crop fixed effects, to show that a mismatch with frontier countries negatively affects crop-specific output in other countries. For example, in their analysis, when a country is one standard deviation more “mismatched” in terms of how dissimilar its pest population is to the pest population of a center of agricultural R&D (for example, the USA), the yield of its crops tends to be about 0.4 standard deviations lower than peers.
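For readers who want to see what "holding constant country and crop fixed effects" does mechanically, here is a minimal sketch on simulated data. Everything in it is an assumption for illustration: the data are synthetic, the panel is balanced (every country grows every crop), and the true slope of -0.4 is simply borrowed from the headline number above. It is not the authors' specification or data.

```python
import random
from typing import List, Tuple

Grid = List[List[float]]  # rows are countries, columns are crops


def two_way_demean(grid: Grid) -> Grid:
    """Remove country (row) and crop (column) means from a balanced grid."""
    n_rows, n_cols = len(grid), len(grid[0])
    row_means = [sum(row) / n_cols for row in grid]
    col_means = [sum(grid[i][j] for i in range(n_rows)) / n_rows for j in range(n_cols)]
    grand_mean = sum(row_means) / n_rows
    return [
        [grid[i][j] - row_means[i] - col_means[j] + grand_mean for j in range(n_cols)]
        for i in range(n_rows)
    ]


def fixed_effects_slope(output: Grid, mismatch: Grid) -> float:
    """Slope of output on mismatch with country and crop fixed effects.

    In a balanced panel, demeaning both variables by row and column and then
    running a simple regression is equivalent to including a dummy variable
    for every country and every crop.
    """
    y = two_way_demean(output)
    x = two_way_demean(mismatch)
    sxy = sum(xv * yv for x_row, y_row in zip(x, y) for xv, yv in zip(x_row, y_row))
    sxx = sum(xv * xv for x_row in x for xv in x_row)
    return sxy / sxx


def simulate(n_countries: int = 40, n_crops: int = 15, true_slope: float = -0.4,
             noise_sd: float = 0.5, seed: int = 0) -> Tuple[Grid, Grid]:
    """Synthetic panel: output = slope * mismatch + country effect + crop effect + noise."""
    rng = random.Random(seed)
    country_fx = [rng.gauss(0, 1) for _ in range(n_countries)]
    crop_fx = [rng.gauss(0, 1) for _ in range(n_crops)]
    mismatch = [[rng.gauss(0, 1) for _ in range(n_crops)] for _ in range(n_countries)]
    output = [
        [true_slope * mismatch[i][j] + country_fx[i] + crop_fx[j] + rng.gauss(0, noise_sd)
         for j in range(n_crops)]
        for i in range(n_countries)
    ]
    return output, mismatch


output, mismatch = simulate()
print(f"Estimated slope: {fixed_effects_slope(output, mismatch):.3f}")
```

The point of the fixed effects is that a country that is unproductive across all its crops, or a crop that is low-yielding everywhere, does not drive the estimate. Only the variation in mismatch within a country across crops, and within a crop across countries, identifies the slope.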

To bolster their analysis, Moscona and Sastry go on to look at two events that shifted technological leadership, thereby shifting ‘mismatch’ patterns: the Green Revolution in the 1960s and 1970s and the rise of US biotechnology in the 1990s. They exploit the fact that these events shifted the center of innovation in certain types of agriculture to identify how changes in where innovation takes place affect productivity in countries with similar distributions of crops and pests. The results are consistent with the previous analysis: countries whose mismatch to frontier R&D declined (for example, because the Green Revolution meant more R&D began to happen in countries with similar pest burdens) began to see greater gains in agricultural productivity. Similarly, countries whose mismatch increased (for example, because the biotech revolution shifted more research to the USA and away from countries with a more similar pest burden) began seeing lower gains.

Together, this body of evidence implies that the location of researchers really does matter at least for agricultural production, health outcomes, human psychology and policy and program implementation. That said, without more research on problems affecting places with fewer researchers it is difficult to tell what we are ‘missing’ and the extent to which research can solve problems in places with few researchers.

Thanks for reading! As always, if you want to chat about this post or innovation generally, let’s grab a virtual coffee. Send me an email at matt.clancy@openphilanthropy.org and we’ll put something in the calendar.

See this argument for Matt’s views on the value of geographic clustering and research productivity.

See Comin and Mestieri (2014) and Verhoogen (forthcoming) for reviews of this literature.

While this is closely related to the idea of “inappropriate technology,” we are focusing specifically on the research that is upstream of technology. This focus allows us to isolate whether one country’s knowledge itself is ‘inappropriate’ for another country’s use, as opposed to the usefulness of knowledge embodied in physical capital.

More recently, and propelled by advances in sequencing technology, researchers have documented variation in population genetics around the world (Wang et al 2022).

One notable counter-example is the Many Labs 2 project, which in 2014 tested 28 different psychology results across a large number of country sites. This study found the extent to which a population was WEIRD was not significantly correlated with whether results replicated in one setting or another. Schimmelpfennig et al. (2023) argued, among other critiques, that the study was powered to detect only the largest variation in results across countries.