Like the rest of New Things Under the Sun, this article will be updated as the state of the academic literature evolves; you can read the latest version here.

It might seem obvious that we want bold new ideas in science. But in fact, really novel work poses a tradeoff. While novel ideas might sometimes be much better than the status quo, they might usually be much worse. Moreover, it is hard to assess the quality of novel ideas because they’re so, well, novel. Existing knowledge is not as applicable to sizing them up. For those reasons, it might be better to actually discourage novel ideas, and to instead encourage slow and incremental expansion of the knowledge frontier. Or maybe not.

For better or worse, the scientific community has settled on a set of norms that appear to encourage safe and creeping science, rather than risky and leaping science.

Blocking New Approaches

How might you identify whether a scientific community blocks new ideas? Merely observing the absence of new ideas isn’t enough; it could be that there just aren’t any new ideas worth pursuing. But what if you could identify sets of scientific fields that are quite similar to each other? Next, imagine you suddenly and randomly exile the dominant researchers in half the fields in order to stymie their ability to block new research. You could then compare what happens in fields that lost these dominant researchers to ones that didn’t. In particular, do new people enter these fields and pursue new and novel ideas? If so, that suggests there were new ideas worth investigating, but the dominant researchers in the field were blocking investigation of them. Alternatively, if new people enter these fields but basically continue doing the same thing as the previous generation, that suggests the opposite; the dominant researchers were not blocking anything and everyone in the field was just pursuing what they thought were the best ideas available at the time.

You can’t really do this experiment because you’re not going to get IRB approval to exile dominant researchers. It’s a bad thing to do. But Azoulay, Fons-Rosen, and Graff Zivin (2019) try to learn something from the sad fact that we live in a world where bad things do happen all the time. They begin by identifying a large set of superstar researchers in the life sciences. It turns out, 452 of these superstars died in the midst of an active research career. This is going to be analogous to the “exile” I described above in this hypothetical idealized experiment, though applying only to one prominent researcher per field, rather than the full set of dominant ones. (Note – as I’ll discuss later, the findings discussed below should not be interpreted as implying these superstars were behaving unethically or something. It’s more complicated than that.)

Azoulay, Fons-Rosen and Graff Zivin next identify a large set of small microfields in which these deceased researchers were active in the five years before their deaths. Each of these microfields consists of about 75 papers on average. For each of these microfields, they find sets of matching microfields that are similar in various ways. Most importantly, these matched microfields are ones that include active participation by a superstar researcher who did not pass away. The idea is to compare what happens in a microfield where a superstar researcher passed away to what happens in similar microfields where a superstar researcher did not pass away.

The first thing that happens is new people do begin to enter the field. Following the death of a superstar researcher, there is a slight increase in the number of new articles published in that microfield by people other than the superstar, as compared to microfields where the superstar lives. These new papers are disproportionately written by people who were not previously publishing in the microfield, rather than by people who were already active in it publishing more.

Most relevant for our inquiry today, these new researchers appear to bring new ideas and new approaches with them. They tend not to cite the existing work in this microfield, including the work of the superstar. And their work is assigned a greater share of novel scientific keywords from the MeSH lexicon (a standardized classification system used in biomedicine). Also important – the new work tends to be well cited. There’s a greater influx of highly cited work than low-citation work.

Can we say anything more about the specific mechanisms going on here?

Well, we can say it’s not simply a case of these dominant researchers vetoing grant proposals and publication from rival researchers. Only a tiny fraction of them were in positions of formal academic power, such as sitting on NIH grant review committees or serving as journal editors, when they passed away. So what else could it be? I’m going to tentatively suggest it’s about the dominance of the ideas the superstar researcher promoted. We’ll return to this notion later. But first, let’s look at some evidence about how scientists resist the influence of new ideas in a field.

Citing novel work

Let’s start at the end. Suppose we’ve recently published an article on an unusual new idea. How is it received by the scientific community?

Wang, Veugelers, and Stephan (2017) look at academic papers published across most scientific fields in 2001 and devise a way to try and measure how novel these papers are. They then look at how novel papers are subsequently cited (or not) by different groups.

To measure the novelty of a paper, they rely on the notion that novelty is about combining pre-existing ideas in new and unexpected ways. They use the references cited as a proxy for the sources of ideas that a paper grapples with, and look for papers that cite pairs of journals that have not previously been jointly cited. The 11.5% of papers with at least one pair of journals never previously cited together in the same paper are called “moderately” novel in their paper.

But they also go a bit further. Some new combinations are more unexpected than others. For example, it might be that I am the first to cite a paper from a monetary policy journal and an international trade journal. That’s kind of creative, maybe. But it would be really weird if I cited a paper from a monetary policy journal and a cell biology journal. Wang, Veugelers, and Stephan create a new category for “highly” novel papers, which cite a pair of journals that have never been cited together in the past, and also are not even in the same neighborhood. Here, we mean journals that are not well “connected” by some other pair of journals.

For example, if journals A and B have never been cited together that’s kind of novel. But suppose A and C have been jointly cited, and also B and C have also been jointly cited. That means there is a journal that acts as a kind of bridge from A to B, even if they’ve never actually been paired themselves. More novel would be a pair of journals X and Y that don’t even share this kind of bridge (or at least, there are fewer bridges than usual). Wang, Veugelers, and Stephan designate the 1% of all papers that make the most unusual novel connections, defined in this way, as highly novel.
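To make the two tiers of novelty concrete, here is a minimal Python sketch on toy data. It is an illustration only: the journal names, the function names, and the use of a raw count of "bridge" journals are my assumptions, and the paper's actual measure of how well connected two journals are may be computed differently.

```python
from itertools import combinations

def cocitation_pairs(papers):
    """Return the set of journal pairs cited together in at least one paper."""
    pairs = set()
    for journals in papers:
        for pair in combinations(sorted(set(journals)), 2):
            pairs.add(pair)
    return pairs

def new_pairs(paper_journals, prior_pairs):
    """Journal pairs in this paper that no earlier paper has cited together."""
    candidates = combinations(sorted(set(paper_journals)), 2)
    return [pair for pair in candidates if pair not in prior_pairs]

def bridge_count(a, b, prior_pairs):
    """Number of journals previously co-cited with both a and b."""
    neighbors = {}
    for x, y in prior_pairs:
        neighbors.setdefault(x, set()).add(y)
        neighbors.setdefault(y, set()).add(x)
    return len(neighbors.get(a, set()) & neighbors.get(b, set()))

# Toy history (hypothetical): each list is the journals cited by one earlier paper.
history = [
    ["Monetary Econ", "Macro Review"],
    ["Trade Journal", "Macro Review"],
    ["Cell Biology", "Genetics"],
]
prior = cocitation_pairs(history)

# A new paper citing three journals that were never paired before.
paper = ["Monetary Econ", "Trade Journal", "Cell Biology"]
for a, b in new_pairs(paper, prior):
    print(f"{a} + {b}: bridges = {bridge_count(a, b, prior)}")
```

In this toy history all three pairs are new, but only the monetary/trade pair has a bridge (the hypothetical "Macro Review"). The pairs involving the cell biology journal have none, which is the flavor of combination that would push a paper toward the "highly novel" category.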

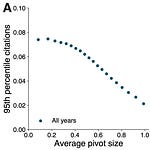

Echoing what I said at the beginning, highly novel work seems desirable; it’s much more likely to become one of the top 1% most cited papers in its field.

But importantly, this isn’t really because people in the field quickly recognize it’s a breakthrough. In fact, this recognition disproportionately comes from other fields, and with a significant delay. Restricting attention to citations received from within the same field, novelty isn’t rewarded.

And even looking across all fields, these citations come late. Restricting attention to citations received in just the first three years, again, novelty isn’t really rewarded.

Moreover, Wang, Veugelers, and Stephan also show moderately and highly novel papers are less likely to be published in the best journals (as measured by the journal impact factor). That suggests to me that highly novel work is also less likely to be published at all, and so the results we’re seeing might be over-estimating the citations received, since they rely on novel work that was good enough to clear skeptical peer review.

From Citations to Grants

All this is important because the reception of your prior work plays a role in your ability to do future work. Li (2017) provides some evidence on this by looking at about 100,000 NIH grant applications filed between 1992 and 2005. Funding for research from the NIH is limited - only 20% of applicants succeed - and so the NIH tries to prioritize funding projects that will have the biggest impact. The trouble here is that assessing the quality of frontier research is hard; those with enough knowledge to serve as good evaluators tend to be people who are also using that knowledge to actually do frontier research. Accordingly, grant applications are assessed by a review committee drawn from other active researchers in the area. They’re looking for proposals they think will generate new and significant scientific findings, taking into account the proposed research questions, methodologies, and capability of the researcher to follow through.

But what constitutes the most impactful work? Li begins by showing a proposal is more likely to be funded if the reviewer has previously cited the applicant’s prior work. Across applicants who are judged by the same committee, and have similar numbers of prior citations and grant awards and similar coarse demographic characteristics, the probability of funding increases by 3.3 percentage points for every (permanent) committee member who has cited the applicant’s prior work. That’s not too surprising on its own; if reviewers liked the applicant’s prior work enough to cite it, they probably like proposals for more work in the same vein.

But this result does imply something worrying. Proposals are not assigned to reviewers at random. Instead, they are usually given to whoever has the closest and most relevant expertise. That means it’s likely that the reviewers of a grant application will be researchers drawn from the applicant’s own scientific field. And if the applicant has a history of doing highly novel research, Wang, Veugelers, and Stephan’s work suggests these reviewers are less likely to have previously cited it. And Li (2017) suggests that means these applicants will face a tougher time getting funding.

Evidence of less indirect bias against novel ideas comes from Boudreau et al. (2016). They convinced a university to run an experiment in grant-making for them, getting 150 applicants to submit a short proposal for a research project on endocrine-related disease, dangling a $2,500 grant for successful applicants and a higher probability of winning a second stage grant with significantly more money available. Boudreau and coauthors use a strategy similar to that of Wang, Veugelers, and Stephan to measure the novelty of these proposals, counting the novel combinations of scientific keywords (again, from the MeSH lexicon) attached to the grant applications. While a little novelty is desirable, overall reviewers looked less favorably on more novel proposals.

Where does anti-novelty bias come from?

Scientists are curious folk; why would they be biased against novel ideas?

Li (2017) and Boudreau et al. (2016) both find some evidence that this bias stems less from malice than from a greater ability of reviewers to identify high quality proposals when they are closer to their existing knowledge. In other words, when proposals are less novel and closer to existing work, reviewers have an easier time separating the really good proposals from the mediocre and poor ones. But when proposals are further from existing work, it’s hard for reviewers to separate the good from the bad, and all such proposals are more likely to be judged as just average. But when grant making is really competitive, only applications judged as being really good might be funded, leaving the hard-to-evaluate novel proposals persistently underfunded.
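The logic of that last step can be sketched with a textbook shrinkage model. To be clear, this is my own illustration and not a model from either paper. Assume true proposal quality is drawn from a standard normal distribution, a reviewer sees quality plus noise, and the reviewer's best guess is the posterior mean, which pulls noisy signals toward the average. The cutoff of 0.8 and the noise levels of 0.5 and 2.0 are made-up numbers.

```python
import math

def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def funding_probability(noise_sd, cutoff, true_quality=None):
    """Chance that a proposal's assessed quality clears the funding cutoff.

    Assumed model: quality ~ N(0, 1), signal = quality + N(0, noise_sd^2),
    and assessed quality = posterior mean = signal / (1 + noise_sd^2).
    With true_quality=None, average over all proposals; otherwise condition
    on a proposal whose true quality is known to us (but not to the reviewer).
    """
    shrink = 1.0 + noise_sd ** 2
    needed_signal = cutoff * shrink  # signal required to clear the cutoff
    if true_quality is None:
        # Unconditionally, signal ~ N(0, 1 + noise_sd^2).
        return 1.0 - normal_cdf(needed_signal / math.sqrt(shrink))
    # Given true quality, signal ~ N(true_quality, noise_sd^2).
    return 1.0 - normal_cdf((needed_signal - true_quality) / noise_sd)

CUTOFF = 0.8          # hypothetical bar for funding
FAMILIAR_NOISE = 0.5  # reviewer knows the area well
NOVEL_NOISE = 2.0     # reviewer is far from the proposal

for label, noise in [("familiar", FAMILIAR_NOISE), ("novel", NOVEL_NOISE)]:
    overall = funding_probability(noise, CUTOFF)
    great = funding_probability(noise, CUTOFF, true_quality=2.0)
    print(f"{label}: funded overall {overall:.3f}, funded if great {great:.3f}")
```

Under these assumptions, a genuinely great proposal (two standard deviations above average) clears the bar about 98% of the time when the reviewer is familiar with the area, but only about 16% of the time when the reviewer's read is noisy. No one in the model dislikes novelty; the penalty comes entirely from uncertainty meeting a high cutoff.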

To show reviewers are better able to identify the quality of less novel papers, Li (2017) and Boudreau et al. (2016) need some kind of “objective” measure of the quality of grant proposals. This isn’t possible, but each paper uses an interesting proxy. In Boudreau et al. (2016)’s experiment with grant-making, they created a way to measure the reviewer’s intellectual “distance” from the proposal, based on the MeSH keywords describing the proposal and MeSH keywords attached to the reviewer’s prior published work. Then, rather than assigning each grant application to the reviewers with the most relevant prior expertise, they randomized who the reviewers were. Each proposal was reviewed by 15 reviewers, and Boudreau and coauthors compare the score assigned by the reviewer who is “closest” to the proposal (i.e., has the most relevant domain expertise) with the average of all the other reviewers. In general, they find there was more disagreement between these expert reviewers and everyone else about which proposals should get high scores rather than low ones. Those with close expertise seemed to know “something” that led them to more divergent conclusions about high scoring proposals.

I think what might be going on is even more clearly illustrated in Li (2017). Li exploits an unusual feature of the grant process to try and find an “objective” measure of NIH grant proposal quality. In the process of preparing an NIH grant proposal, researchers do a lot of work that is publishable. Indeed, Li argues for the period she studies, the arms race in NIH application quality had progressed to the point where the majority of the application was actually based on work that was nearly completed and ready for publication. This means a lot of the material that was in the grant application is already barreling towards publication, whether or not the proposal is funded (presumably the proposal builds on and extends this work). Li tries to identify this proposal material that is spun out into academic articles by looking at work from the lab that is published in a short interval after the proposal is submitted but before new experiments could plausibly have been completed, and which is about the same topic as the grant application (as judged by shared keywords). She then looks at how well this “spinoff” work gets cited. This provides a proxy for the quality of the grant applications, both those that are ultimately funded and those that are not.
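As a rough sketch of the kind of filter this describes, the Python below picks out a lab's papers that appear shortly after an application and share keywords with it, then sums their citations. The field names, the 365-day window, the one-shared-keyword threshold, and the toy data are all hypothetical choices of mine; Li's actual matching rules are more involved.

```python
from datetime import date

def spinoff_papers(application, publications, window_days=365, min_shared=1):
    """Papers published soon after the application that share its keywords.

    window_days and min_shared are illustrative assumptions, not values
    taken from Li (2017).
    """
    spinoffs = []
    for pub in publications:
        days_after = (pub["published"] - application["submitted"]).days
        shared = set(pub["keywords"]) & set(application["keywords"])
        if 0 <= days_after <= window_days and len(shared) >= min_shared:
            spinoffs.append(pub)
    return spinoffs

def quality_proxy(application, publications, **kwargs):
    """Total citations to spinoff papers, used as a stand-in for proposal quality."""
    return sum(p["citations"] for p in spinoff_papers(application, publications, **kwargs))

# Toy data (hypothetical): one application and four papers from the same lab.
application = {"submitted": date(2000, 2, 1), "keywords": {"insulin", "receptor"}}
lab_publications = [
    {"published": date(2000, 9, 1), "keywords": {"insulin", "signaling"}, "citations": 40},
    {"published": date(2003, 5, 1), "keywords": {"insulin"}, "citations": 100},   # too late
    {"published": date(2000, 6, 1), "keywords": {"zebrafish"}, "citations": 10},  # off topic
    {"published": date(1999, 6, 1), "keywords": {"receptor"}, "citations": 25},   # too early
]

print(quality_proxy(application, lab_publications))
```

Because the proxy is built only from papers that were already on their way to publication, it can be computed the same way for funded and unfunded applications.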

The takeaway is well summarized in the following figure. On the vertical axis, we have the probability an application gets funded, after taking into account the applicant’s prior record (how much they’ve been cited, how many grants they’ve won, their demographic and educational background, and so on). On the horizontal axis we’ve got an estimate of the number of citations spinoff work from the grant application receives. The scatterplot drifts up and to the right, indicating that grants that are higher quality (i.e., spinoff publications get more citations) are more likely to get funded. But Li divides these into two groups. In red, we have applications that have also been cited by a permanent member of the grant review committee, and in blue we have ones that weren’t. Note for the red dots there is a stronger relationship between quality and funding than for the blue. If the reviewers have previously cited your work, they are better at discerning high and low quality proposals, compared to when they have not cited it.

One way to interpret this is that committee members are better able to judge quality for work that is closely related to their own work. If you work on similar stuff as a committee member, you are more likely to be cited by them. If you go on to propose a bad research project, then it doesn’t really help you that the committee member has previously cited you. You get rejected, and your spinoff publications receive few citations. On the other hand, if you propose a great idea that will garner lots of citations, its probability of getting funded isn’t much better than your bad idea if no one on the committee is familiar with your work, but it is much more likely to be funded if they are.
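One compact way to write down this pattern is a regression with an interaction term. The notation here is mine, offered as a sketch of the idea rather than Li's exact specification:

```latex
\Pr(\text{funded}_i) = \alpha + \beta\, q_i + \gamma\, c_i + \delta\,(q_i \times c_i) + X_i'\theta + \varepsilon_i
```

Here $q_i$ is the quality proxy for application $i$ (citations to its spinoff work), $c_i$ equals 1 if a permanent committee member has previously cited the applicant and 0 otherwise, and $X_i$ collects the applicant's prior record. The slope of funding on quality is $\beta$ when no one on the committee has cited the applicant and $\beta + \delta$ when someone has. The steeper relationship for cited applicants corresponds to $\delta > 0$.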

Uncertainty + Competition = Novelty Penalty?

To sum up, while I don’t doubt scientists have biases towards their own chosen fields of study (how could they not? They chose to study it because they liked it!), I suspect at least part of the bias against new ideas comes from a more subtle process. When assessing a new idea, we can make more confident assessments when the idea is closer to our own expertise. All else equal, this gives an edge to less novel ideas when we’re ranking ideas from most to least promising. But in a world with scarce scientific resources (whether those be funds or attention), we can’t give resources to every idea that merits them. Instead, we start at the top and work our way down. But that can mean valuable novel ideas are less likely to get resources than less valuable but less novel ideas.

(As an aside, this bias against novel work might be a reason why, paradoxically, novel work is actually more likely to be highly cited. If you know there’s a bias against novelty, then you also might believe it’s not worth working on novel stuff unless it has a decent shot of ultimately being really important.)

(As another aside, the anti-novelty bias is probably an additional reason we should fund more R&D; maybe it’ll increase our willingness to fund novel stuff when competition for resources isn’t so fierce. End asides.)

Finally, I think we can see something like this dynamic in Azoulay, Fons-Rosen, and Graff Zivin’s study of the impact of superstar deaths. Recall that it’s probably not the case that superstars are blocking rival research by personally denying grants or publications, since in most cases they weren’t actually sitting in positions of authority when they died. In the paper, they actually go through the effort of dropping everyone in one of these positions of authority from the analysis, in order to show it doesn’t drive their results. But even if they aren’t personally blocking research, their ideas might be.

For one, the ability of researchers to enter with new ideas seems weaker when the superstar’s former collaborators retain positions of influence, sitting on more NIH grant review committees and editorships (though the latter can only be assessed really imperfectly). Second, Azoulay, Fons-Rosen, and Graff Zivin provide some suggestive evidence that when the microfield has more firmly consolidated itself around the ideas promoted by the superstar, it remains hard for newcomers to enter even after the superstar has passed away. They attempt to measure this by looking at how closely connected coauthorship networks are, how intensely the field cites its own work, and how similar papers in the field are to each other according to an algorithm.

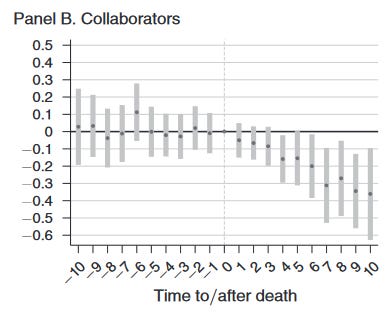

But when a field is not highly consolidated around the superstar’s ideas and there is still some active debate about the way a microfield might go, there appears to be a big effect from having a superstar active in the field prematurely pass away. After the superstar researcher passes away, clearly they aren’t around anymore to publish, thereby expanding and clarifying the idea. And indeed, the entry of newcomers is strongest in fields where the superstar had an outsized role in the microfield, as measured by the share of publications, citations, and research funding they garnered. But the death of a superstar has additional knock-on effects that might further shake the dominance of their ideas. Even the former collaborators of the superstar publish significantly less work after the superstar dies. And this effect is stronger for collaborators who work on topics more similar to the superstar’s.

Taken together, I think it suggests that when a superstar prematurely dies, their ideas may fade in importance and salience, as compared to fields where the superstar stuck around. That could create space for alternative approaches to get resources.

To close, I want to be sure and caution against a sort of fatalism. This isn’t absolute, it’s probabilistic. Novel ideas do get published, they can attract attention, and old paradigms do fall. It just happens less often than we might like. Research has an inertia that is real, but not insurmountable.

Thanks for reading! If you liked this, you might also enjoy this article from early 2021 talking about how these forces of conservatism can be overcome, using the credibility revolution in economics (for which another set of Nobel prizes was just awarded) as an illustrative example: How a field fixes itself - the applied turn in economics

For other related readings, follow the links listed at the bottom of the article’s page on New Things Under the Sun (.com).
